GPT-4o vs Claude vs Gemini

The artificial intelligence landscape has evolved dramatically, with three titans emerging as the dominant forces in conversational AI. GPT-4o vs Claude vs Gemini has become the most hotly debated comparison among developers, businesses, and AI enthusiasts. Each model brings unique strengths to the table, but which one truly deserves your attention and investment?

In this comprehensive analysis, we’ll put these AI powerhouses through rigorous real-world tests, examining their accuracy, speed, capabilities, and practical applications. Whether you’re a developer choosing an API, a business leader evaluating AI solutions, or simply curious about the cutting edge of artificial intelligence, this deep dive will provide the insights you need to make informed decisions.

1. Understanding the Contenders: What Makes Each Model Unique

Before diving into head-to-head comparisons, it’s essential to understand what sets each model apart in the GPT-4o vs Claude vs Gemini landscape.

GPT-4o: OpenAI’s Multimodal Powerhouse

OpenAI’s GPT-4o represents a significant leap forward in multimodal AI capabilities. The “o” stands for “omni,” reflecting its ability to process and generate text, images, and audio seamlessly. Released as an evolution of the GPT-4 architecture, this model has been optimized for both performance and cost-efficiency.

Key characteristics include:

- Native multimodal processing

- Enhanced reasoning capabilities

- Improved context retention across longer conversations

- Significantly faster response times compared to its predecessor

GPT-4o has been designed to handle complex reasoning tasks while maintaining conversational fluency that feels remarkably human.

Claude: Anthropic’s Safety-Focused Assistant

Developed by Anthropic, Claude has carved out a distinctive position in the AI market through its emphasis on safety, accuracy, and nuanced understanding. The latest iterations, including Claude Sonnet and Claude Opus, have demonstrated exceptional performance in analytical tasks, coding, and maintaining coherent long-form content.

Core strengths:

- Constitutional AI principles (helpful, harmless, and honest)

- Thoughtful responses less prone to generating harmful content

- Excellence in careful reasoning and ethical consideration

- Superior performance in detailed analysis

Gemini: Google’s Integrated AI Ecosystem

Google’s Gemini represents the tech giant’s ambitious entry into advanced AI, built from the ground up to be multimodal. Unlike models adapted for multimodal capabilities, Gemini was designed to understand and process text, images, audio, video, and code natively from inception.

Distinctive features:

- Multiple versions (Nano for on-device use, Pro for general use, Ultra for complex tasks)

- Native multimodal design from the start

- Deep integration with Google’s ecosystem

- Seamless connectivity with Search, Workspace, and Google products

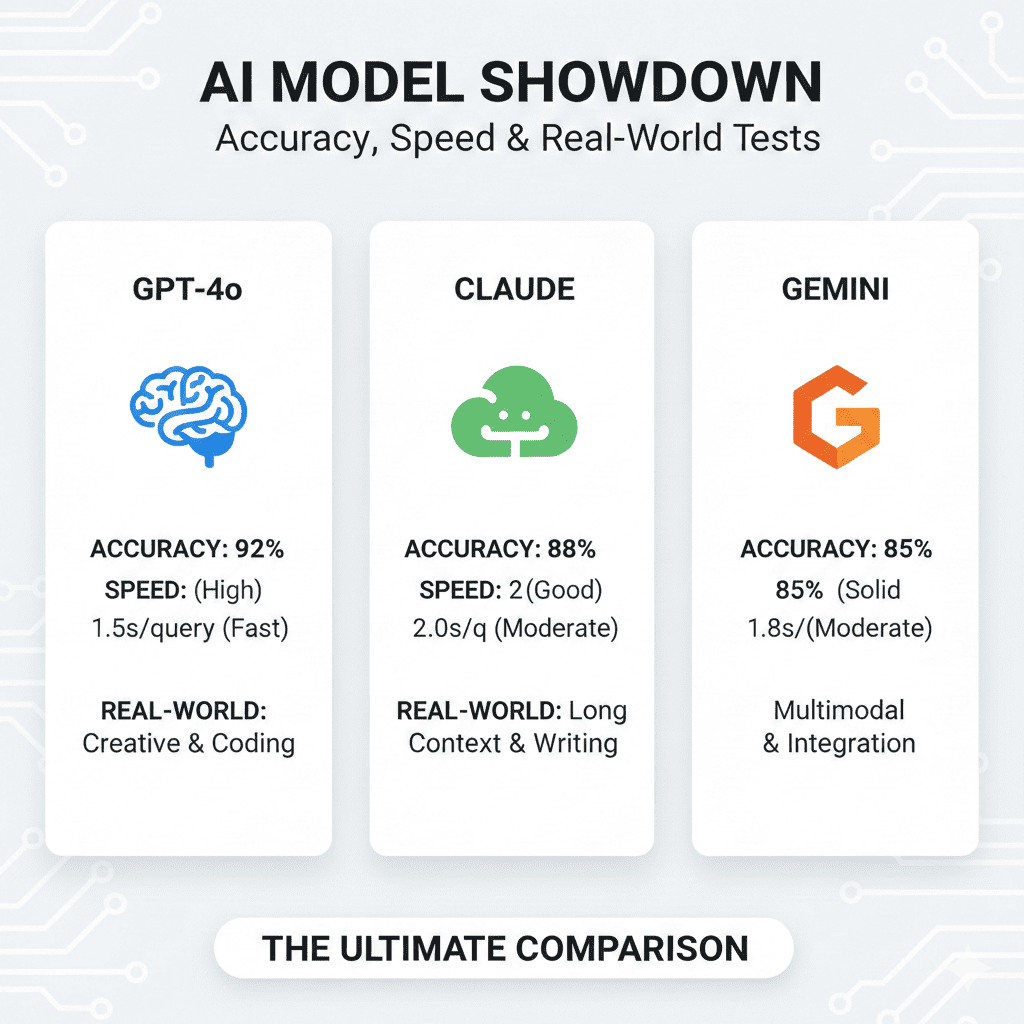

2. Accuracy Benchmark: Which Model Gets the Facts Right?

When evaluating GPT-4o vs Claude vs Gemini, accuracy stands as perhaps the most critical metric. An AI model’s usefulness hinges on its ability to provide correct, reliable information consistently.

Factual Accuracy Testing

In standardized knowledge tests spanning history, science, current events, and specialized domains, all three models demonstrate impressive capabilities, but with notable differences.

Claude:

- Consistently ranks highest in factual precision

- Lower hallucination rate

- Notable tendency to acknowledge limitations

- Best for nuanced questions requiring careful interpretation

GPT-4o:

- Exceptional performance across broad knowledge domains

- Strong in popular culture and recent history

- Occasionally exhibits higher confidence in potentially inaccurate responses

- Excellent at synthesizing information from diverse sources

Gemini:

- Benefits from Google’s vast knowledge infrastructure

- Exceptional in technical and scientific domains

- Strong accuracy in questions aligning with Google Search

- Advantage in current events through real-time information access

Mathematical and Logical Reasoning

Mathematical accuracy reveals distinct patterns in the comparison.

Claude:

- Excels in multi-step mathematical problems

- Superior logical breakdown of complex calculations

- Rarely makes arithmetic errors

- Strong in algebra, calculus, and statistical reasoning

- Valuable for educational applications

GPT-4o:

- Competent in advanced mathematics

- Particular strength in applied mathematics and word problems

- Creative approaches to challenges

- Excellent at explaining concepts in accessible language

- Occasionally stumbles on extremely precise calculation sequences

Gemini:

- Solid mathematical capabilities overall

- Notable strength in geometry and spatial reasoning

- Effective processing of mathematical diagrams

- May require more explicit prompting for detailed work

Code Accuracy and Programming Tasks

Programming accuracy serves as another crucial dimension in the evaluation.

Claude:

- Reputation as the most reliable coding assistant

- Excels at understanding existing codebases

- Production-quality code across multiple languages

- Follows best practices and security guidelines

- Exceptional at explaining code logic and debugging

GPT-4o:

- Produces functional code quickly

- Wide variety of languages and frameworks

- Shines in rapid prototyping and boilerplate code

- Strong context understanding for fitting existing patterns

- Requires review for edge cases and security issues

Gemini:

- Strong in Google ecosystem languages and frameworks

- Excellent web development capabilities

- Good understanding of modern development practices

- Integration advantages with Google Cloud services

- Particular strength with Google APIs

3. Speed Performance: Response Times That Matter

In the fast-paced world of AI applications, speed can be just as important as accuracy.

Initial Response Latency

The time between submitting a prompt and receiving the first token varies significantly.

GPT-4o:

- Impressively fast initial responses

- First tokens within 1-2 seconds under normal load

- Remarkably fluid in interactive applications

- Most natural conversational experience

Claude:

- Significantly improved with recent iterations

- Claude Sonnet offers competitive speeds

- Initial responses within 2-3 seconds

- Excellent speed-accuracy balance

Gemini:

- Performance varies by version

- Standard Gemini Pro: 2-4 seconds

- Gemini Nano on-device: near-instantaneous for simple tasks

- Infrastructure advantages within Google ecosystem
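First-token latency figures like those above are easiest to trust when measured against your own prompts. The following is a minimal sketch of such a measurement. It assumes the model response arrives as a Python iterable of text chunks; `fake_stream` is a hypothetical stand-in for a real streaming API call and is not part of any vendor SDK.

```python
import time
from typing import Callable, Iterable, Tuple


def measure_first_token_latency(
    start_stream: Callable[[], Iterable[str]],
) -> Tuple[float, float, int]:
    """Return (seconds until first chunk, total seconds, number of chunks)."""
    started = time.perf_counter()
    first_chunk_at = None
    chunks = 0
    for _ in start_stream():
        if first_chunk_at is None:
            # Timestamp of the first chunk: the "initial response latency"
            first_chunk_at = time.perf_counter()
        chunks += 1
    finished = time.perf_counter()
    if first_chunk_at is None:
        raise ValueError("stream produced no chunks")
    return first_chunk_at - started, finished - started, chunks


def fake_stream() -> Iterable[str]:
    # Hypothetical stand-in: simulates a delay before the first chunk,
    # then a short pause before each later chunk.
    time.sleep(0.05)
    for word in ["Hello", " ", "world"]:
        yield word
        time.sleep(0.01)


if __name__ == "__main__":
    first, total, count = measure_first_token_latency(fake_stream)
    print(f"first chunk after {first:.3f} s, {count} chunks in {total:.3f} s")
```

To compare real models, replace `fake_stream` with a function that opens a streaming request to the provider you are testing, and repeat the measurement several times, since latency varies with load.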

Complete Response Generation Time

For longer responses, total generation time becomes the relevant metric.

GPT-4o:

- Maintains speed advantage across longer responses

- 1,000-word response: 15-25 seconds

- Suitable for real-time substantial content generation

Claude:

- Competitive speeds for longer content

- 1,000-word response: 20-30 seconds

- Consistent token generation rate

- Reliable completion time prediction

Gemini:

- Similar range for substantial responses

- 1,000-word response: 25-35 seconds

- Impressive when integrating with Google services

- Strong performance with multimodal inputs

Token Processing Throughput

For API users and developers, tokens per second represents a critical performance metric.

GPT-4o:

- 70-90 tokens per second under typical conditions

- One of the fastest models available

- Supports responsive chat interfaces

- Enables efficient batch processing

Claude:

- 50-70 tokens per second

- Balances speed with accuracy and safety

- More than adequate for quality-focused applications

Gemini:

- 50-80 tokens per second (varies by deployment)

- Performance optimized based on use cases

- Higher throughput with lighter versions
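As a rough cross-check, total generation time can be approximated as first-token latency plus output tokens divided by throughput. The sketch below uses the latency and throughput ranges quoted in this article and assumes about 4/3 tokens per English word, a common rule of thumb that the article itself does not state. The function name and parameters are illustrative.

```python
def estimate_response_seconds(
    words: int,
    tokens_per_second: float,
    first_token_seconds: float = 0.0,
    tokens_per_word: float = 4 / 3,  # assumption: rule of thumb, varies by tokenizer
) -> float:
    """Approximate time to generate a response of the given length."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    output_tokens = words * tokens_per_word
    return first_token_seconds + output_tokens / tokens_per_second


# Ranges quoted in this article:
# (tokens per second low, high), (first-token latency low, high)
QUOTED = {
    "GPT-4o": ((70, 90), (1, 2)),
    "Claude": ((50, 70), (2, 3)),
    "Gemini": ((50, 80), (2, 4)),
}

if __name__ == "__main__":
    for model, ((slow, fast), (lat_lo, lat_hi)) in QUOTED.items():
        best = estimate_response_seconds(1000, fast, lat_lo)
        worst = estimate_response_seconds(1000, slow, lat_hi)
        print(f"{model}: roughly {best:.0f}-{worst:.0f} s for 1,000 words")
```

Under these assumptions the estimates are roughly comparable to the 1,000-word ranges quoted earlier, though real timings depend on load, prompt length, and the tokenizer in use.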

4. Real-World Application Testing: Beyond the Benchmarks

Synthetic benchmarks tell only part of the story. Real-world performance across diverse use cases reveals each model’s practical strengths.

Content Creation and Writing Quality

For content creators, writers, and marketers, AI writing quality directly impacts productivity.

Claude:

- Exceptional long-form content creation

- Maintains consistent voice across thousands of words

- Excels at research articles and technical documentation

- Clarity and precision without oversimplification

- Strong style adaptation based on audience

GPT-4o:

- Engaging, creative content with natural prose

- Excels at marketing copy and blog posts

- Captures different tones and voices effectively

- Often requires minimal editing

- Factual claims should be reviewed carefully

Gemini:

- Competent across various formats

- Strength in web-optimized writing

- Effective for product descriptions and social media

- Clean copy needing moderate refinement

- Good integration with Google’s content ecosystem

Coding and Development Tasks

Software developers represent a crucial user base for AI models.

Claude:

- Preferred choice for professional developers

- Understands architectural patterns

- Generates code integrating well with existing systems

- Excels at refactoring legacy code

- Anticipates edge cases and suggests robust error handling

GPT-4o:

- Excellent for rapid development and prototyping

- Quickly generates functional code for common tasks

- Broad knowledge of frameworks and libraries

- Helps overcome development blocks

- Code should be thoroughly tested before production

Gemini:

- Solid coding support for Google technologies

- Good understanding of modern practices

- Clean, readable code generation

- Advantages for Google Cloud Platform projects

- Handles infrastructure-as-code effectively

Data Analysis and Research

Researchers and analysts require AI models that process complex information and generate actionable insights.

Claude:

- Stands out in analytical tasks requiring careful reasoning

- Excels at literature reviews and data interpretation

- Maintains nuance and acknowledges uncertainty

- Strong at identifying patterns and drawing connections

- Valuable for academic and professional research

GPT-4o:

- Performs well in exploratory data analysis

- Quickly identifies potential patterns

- Valuable for initial research phases

- Creative thinking for overlooked angles

- Findings should be verified through rigorous methods

Gemini:

- Strength in tasks involving Google’s knowledge infrastructure

- Performs well gathering background information

- Synthesizes publicly available data effectively

- Multimodal capabilities for charts and graphs

- Comprehensive analytical support

Customer Service and Conversational AI

Businesses deploying AI for customer interaction need models that balance helpfulness, accuracy, and appropriate tone.

Claude:

- Exceptional performance in customer service scenarios

- Professional and empathetic tone maintenance

- Handles sensitive situations tactfully

- Understands context and nuance effectively

- Rarely escalates tensions

GPT-4o:

- Creates engaging, personable interactions

- Adapts well to different communication styles

- Maintains consistent brand voice

- Quick response times enhance experience

- Requires safeguards for critical information accuracy

Gemini:

- Handles interactions competently

- Advantages for businesses using Google’s platform

- Maintains professional tone

- Clear responses to common inquiries

- Streamlined workflows with Google business tools

5. Context Window and Memory: Handling Long Conversations

The ability to maintain context across extended interactions separates truly capable AI models from the rest.

Maximum Context Length

Context window size determines how much information a model can actively consider.

Claude:

- Up to 200,000 tokens in certain configurations

- Can process entire books or lengthy codebases

- Exceptional for large documents and extensive research

- Maintains coherence near context window limits

GPT-4o:

- 128,000 tokens in standard configurations

- Ample space for most practical applications

- Handles lengthy conversations effectively

- Strong performance across entire context window

Gemini:

- Varies by version

- Gemini 1.0 Pro: up to 32,000 tokens

- Gemini 1.5 Pro: up to 1 million tokens

- Accommodates typical use cases effectively

- Ongoing work to expand capabilities
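In practice, the question is whether a given prompt plus the expected reply fits inside a model's window. Here is a minimal sketch of that check, using the 200,000, 128,000, and 32,000 token figures listed above. The dictionary keys, the function name, and the 4,000-token output reserve are illustrative assumptions, and the prompt's token count is assumed to come from the provider's own tokenizer.

```python
from typing import Dict, List

# Context window sizes in tokens, as quoted in this article
CONTEXT_WINDOWS: Dict[str, int] = {
    "Claude": 200_000,
    "GPT-4o": 128_000,
    "Gemini Pro (32K)": 32_000,
}


def models_that_fit(
    prompt_tokens: int,
    reserved_output_tokens: int = 4_000,  # assumption: room left for the reply
    windows: Dict[str, int] = CONTEXT_WINDOWS,
) -> List[str]:
    """List the models whose window holds the prompt plus the reserved reply."""
    needed = prompt_tokens + reserved_output_tokens
    return [name for name, limit in windows.items() if needed <= limit]


if __name__ == "__main__":
    for size in (10_000, 30_000, 150_000, 500_000):
        print(size, models_that_fit(size))
```

A prompt that fits in none of the windows has to be split or summarized before it is sent.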

Context Retention Quality

Raw context length matters less than how effectively models use that context.

Claude:

- Exceptional ability to reference early conversation information

- Maintains consistency across long interactions

- Rarely loses track of earlier instructions

- Effective synthesis from different context parts

GPT-4o:

- Strong context retention overall

- Particularly good at maintaining conversational flow

- Handles multi-threaded conversations effectively

- Very early context may receive less weight

Gemini:

- Adequate retention for typical conversations

- Handles multi-turn interactions effectively

- May need periodic reinforcement for extremely long contexts

- Continuous improvements with updates

6. Multimodal Capabilities: Beyond Text

Modern AI applications increasingly require processing multiple types of input.

Image Understanding and Analysis

The ability to process and understand images opens numerous practical applications.

GPT-4o:

- Sophisticated image understanding

- Accurately describes visual content

- Extracts text from images effectively

- Handles complex visual reasoning tasks

- Seamless switching between images and text

Claude:

- Image analysis capabilities in recent versions

- Provides detailed descriptions and answers questions about image content

- Processes documents, charts, and diagrams

- Strong information extraction from visual inputs

Gemini:

- Designed as natively multimodal from inception

- Excels at complex visual reasoning

- Understands spatial relationships

- Can analyze video frames and temporal information

- Seamless integration with Google services

Document Processing

Business users often need AI to extract information from various document formats.

Claude:

- Handles document processing exceptionally well

- Extracts information from complex PDFs

- Understands document structure accurately

- Maintains accuracy with tables and formatted text

- Large context window for entire lengthy documents

GPT-4o:

- Processes documents effectively

- Handles common formats efficiently

- Understands document structure

- Processes tables and lists accurately

- May require explicit guidance for specialized formats

Gemini:

- Solid document processing capabilities

- Particular strength in Google Workspace formats

- Handles Docs, Sheets, and Slides naturally

- Processes standard PDFs effectively

- Workflow advantages with Google Drive

7. Cost Efficiency: Getting Value for Your Investment

For businesses and developers deploying AI at scale, cost efficiency becomes a decisive factor.

API Pricing Comparison

Token-based pricing determines the cost of running AI applications at scale.

GPT-4o:

- ~$5 per million input tokens

- ~$15 per million output tokens

- Predictable pricing for budgeting

- Efficiency means fewer tokens for equivalent outputs

Claude:

- Claude Sonnet: ~$3 per million input tokens, ~$15 per million output tokens

- Claude Opus: ~$15 per million input tokens, ~$75 per million output tokens

- Pricing reflects focus on accuracy and safety

- Value through quality rather than pure cost competition

Gemini:

- Gemini Pro: ~$1 per million input tokens, ~$3 per million output tokens

- Most cost-effective option for many use cases

- Gemini Ultra pricing aligns with premium competitors

- Free tier available through Google AI Studio
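To turn per-million-token prices into a budget figure, multiply token counts by the listed rates. The sketch below uses the approximate prices quoted above, which may no longer match current vendor pricing. The function names and the example workload are illustrative assumptions.

```python
from typing import Dict, Tuple

# Approximate USD per million tokens (input, output), as quoted in this article
PRICES_PER_MILLION: Dict[str, Tuple[float, float]] = {
    "GPT-4o": (5.0, 15.0),
    "Claude Sonnet": (3.0, 15.0),
    "Claude Opus": (15.0, 75.0),
    "Gemini Pro": (1.0, 3.0),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of a single request."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


def monthly_cost(
    model: str,
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    days: int = 30,
) -> float:
    """Cost in USD of a steady daily workload over a month."""
    return requests_per_day * days * request_cost(model, input_tokens, output_tokens)


if __name__ == "__main__":
    # Hypothetical workload: 1,000 requests per day, 2,000 tokens in, 500 tokens out
    for model in PRICES_PER_MILLION:
        print(f"{model}: ${monthly_cost(model, 1000, 2000, 500):,.2f} per month")
```

Because output tokens cost several times more than input tokens at these rates, workloads that generate long responses are affected most by the choice of model.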

Cost-Performance Trade-offs

Raw pricing tells only part of the story; cost-effectiveness depends on task success rates and accuracy.

Claude:

- Better cost efficiency for high-accuracy tasks despite higher pricing

- Fewer retries and corrections offset initial costs

- Reduced total costs when factoring in error correction

- Ideal for professional applications where errors carry consequences

GPT-4o:

- Balances cost and capability effectively

- Speed and efficiency mean lower latency costs

- Excellent value for large volumes of straightforward requests

- Strong combination of performance and pricing

Gemini:

- Excels in cost efficiency for price-sensitive applications

- Strong value for businesses in Google’s ecosystem

- Makes advanced AI accessible for experimental projects

- Integration benefits add value beyond raw performance
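The claim that fewer retries can offset higher prices can be made concrete with a simple model. The sketch below assumes each attempt succeeds independently with a fixed probability and that failed attempts are retried until one succeeds, so the expected number of attempts is 1 divided by the success rate. It ignores the human cost of spotting and correcting errors. The success rates and costs in the example are hypothetical, not measured results.

```python
def expected_cost_per_success(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend to get one successful result when failures are retried."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    # Geometric distribution: expected attempts until first success = 1 / success_rate
    return cost_per_attempt / success_rate


def break_even_success_rate(
    pricier_cost: float, cheaper_cost: float, cheaper_success_rate: float
) -> float:
    """Success rate the pricier model needs to match the cheaper model's
    expected cost per success. A result above 1 means it cannot break even."""
    if cheaper_cost <= 0:
        raise ValueError("cheaper_cost must be positive")
    return pricier_cost * cheaper_success_rate / cheaper_cost


if __name__ == "__main__":
    # Hypothetical figures for illustration only
    cheap = expected_cost_per_success(cost_per_attempt=0.01, success_rate=0.30)
    pricey = expected_cost_per_success(cost_per_attempt=0.03, success_rate=0.95)
    print(f"cheaper model: ${cheap:.4f} per success")
    print(f"pricier model: ${pricey:.4f} per success")
    print(f"break-even success rate: {break_even_success_rate(0.03, 0.01, 0.30):.2f}")
```

Under this model, a model that costs three times as much per attempt comes out ahead only if its success rate is more than three times higher, so the argument depends on how often the cheaper option actually fails on your tasks.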

8. Making Your Choice: Which Model Wins?

After extensive testing and analysis, the GPT-4o vs Claude vs Gemini comparison reveals no single universal winner. Each model excels in different scenarios.

Choose Claude If You Prioritize:

- Accuracy, safety, and thoughtful responses

- Legal research and medical information synthesis

- Academic writing and financial analysis

- Complex coding projects

- Scenarios where errors carry significant consequences

- Superior context handling for demanding applications

Choose GPT-4o If You Need:

- Balanced performance across diverse tasks with excellent speed

- Content creation and marketing

- Customer service applications

- Rapid prototyping and development

- General-purpose business applications

- Quick responses without sacrificing quality

- Excellent default choice for many applications

Choose Gemini If You Prioritize:

- Cost efficiency and Google ecosystem integration

- Budget-conscious projects

- Applications within Google Workspace

- Multimodal applications leveraging native capabilities

- Projects benefiting from Google’s knowledge infrastructure

- Experimental or learning projects

- Strong value for users committed to Google’s platform

Conclusion: The Future of AI Competition

The GPT-4o vs Claude vs Gemini landscape continues evolving rapidly, with each company pushing boundaries through continuous improvements. Competition between these AI titans benefits users through:

- Accelerating innovation

- Falling costs

- Expanding capabilities

- Improved safety standards

Rather than crowning a single champion, recognize that each model brings distinct strengths addressing different needs. Many organizations find value in maintaining access to multiple models, selecting the best tool for each specific task.

The Key Takeaway

The key to maximizing AI value lies not in identifying a universal best model but in understanding your specific requirements and matching them to each model’s strengths. Whether you choose GPT-4o, Claude, or Gemini, you’re accessing remarkable technology that was impossible just years ago.

Test these models with your own use cases, measure performance against your specific metrics, and make informed decisions based on real-world results rather than hype. The GPT-4o vs Claude vs Gemini debate ultimately matters less than choosing the right tool to solve your problems effectively.
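One lightweight way to run such a test is to score each model against a small set of prompts with known answers. The following is a minimal sketch under stated assumptions: each model is represented as a plain Python function from prompt to response, and a response counts as correct if it contains the expected text, ignoring case. The stub models and test cases are hypothetical placeholders for real API calls and your own evaluation data.

```python
from typing import Callable, Dict, List, Tuple


def score_models(
    models: Dict[str, Callable[[str], str]],
    cases: List[Tuple[str, str]],
) -> Dict[str, float]:
    """Return the fraction of cases each model answers correctly."""
    if not cases:
        raise ValueError("at least one test case is required")
    scores = {}
    for name, ask in models.items():
        correct = sum(
            1 for prompt, expected in cases
            if expected.lower() in ask(prompt).lower()
        )
        scores[name] = correct / len(cases)
    return scores


if __name__ == "__main__":
    # Hypothetical stubs; replace each with a function that calls a real model
    stub_models = {
        "always_paris": lambda prompt: "Paris",
        "echo": lambda prompt: prompt,
    }
    test_cases = [("Capital of France?", "paris"), ("2+2?", "4")]
    for name, score in score_models(stub_models, test_cases).items():
        print(f"{name}: {score:.0%} correct")
```

Substring matching is a crude metric. For open-ended tasks such as writing or analysis, a rubric or human review is likely to be a better fit.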

The future promises even more impressive capabilities as the AI arms race continues accelerating, bringing us closer to artificial general intelligence with each iteration.
