The artificial intelligence revolution runs on silicon. As AI models grow more complex and computationally demanding, the race to build faster, more efficient hardware has intensified. In 2025, AI hardware benchmarks have become the primary basis for comparing performance across training, inference, and deployment scenarios.

This comprehensive guide ranks the fastest chips, GPUs, and cloud platforms powering today’s AI applications. Whether you’re a researcher training large language models, an enterprise deploying computer vision systems, or a developer choosing infrastructure for your AI startup, understanding current AI hardware benchmarks is essential for making informed decisions.

From NVIDIA’s latest GPU architectures to emerging competitors, from specialized AI accelerators to cloud-based solutions, we’ll examine the performance metrics that matter and reveal which hardware delivers the best results for different workloads.

1. Understanding AI Hardware Performance Metrics

Before diving into specific rankings, it helps to understand how AI hardware benchmarks measure performance, since that determines how results should be read and which hardware fits a given workload.

Core Performance Indicators

FLOPS (Floating Point Operations Per Second) represents raw computational throughput. Modern AI accelerators deliver peak performance measured in petaFLOPS at low precision, and rack-scale systems built from them can exceed one exaFLOP for specific precision formats. However, peak FLOPS often differs significantly from sustained performance in real-world applications.
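The gap between peak and sustained FLOPS can be expressed as a utilization ratio. The sketch below is one rough way to estimate it for transformer training, assuming the common rule of thumb of about 6 floating-point operations per parameter per training token. The function name and the sample numbers are illustrative assumptions, not figures from any benchmark.

```python
def model_flops_utilization(tokens_per_second: float,
                            parameter_count: float,
                            peak_flops: float) -> float:
    """Estimate the fraction of peak FLOPS that a training run sustains.

    Assumes roughly 6 FLOPs per parameter per token (forward plus backward
    pass), a rule of thumb for dense transformers rather than an exact count.
    """
    if peak_flops <= 0:
        raise ValueError("peak_flops must be positive")
    achieved_flops = 6.0 * parameter_count * tokens_per_second
    return achieved_flops / peak_flops


# Hypothetical run: a 1-billion-parameter model processing 1,000 tokens/s
# on hardware with a peak of 10 teraFLOPS (1e13 FLOPS).
utilization = model_flops_utilization(1_000, 1e9, 1e13)
print(f"Sustained utilization: {utilization:.0%}")
```

A ratio well below 1.0 is normal in practice; the value is mainly useful for comparing configurations of the same workload.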

Tensor Core performance specifically measures operations optimized for matrix multiplication, the fundamental computation in neural networks. This metric matters more than general FLOPS for deep learning workloads.

Memory bandwidth determines how quickly data moves between processors and memory. AI models with billions of parameters require enormous bandwidth to avoid bottlenecks. Top-tier accelerators deliver over 3 TB/s memory bandwidth through specialized high-bandwidth memory architectures.
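One common way to reason about when bandwidth rather than compute is the limit is the roofline model: attainable throughput is the smaller of peak compute and bandwidth multiplied by arithmetic intensity (FLOPs performed per byte moved). The sketch below assumes a hypothetical accelerator with round-number specifications chosen only for illustration.

```python
def attainable_tflops(peak_tflops: float,
                      bandwidth_tb_per_s: float,
                      flops_per_byte: float) -> float:
    """Roofline estimate of achievable throughput.

    TB/s multiplied by FLOPs/byte gives TFLOPS, so both terms share a unit.
    """
    return min(peak_tflops, bandwidth_tb_per_s * flops_per_byte)


def ridge_point(peak_tflops: float, bandwidth_tb_per_s: float) -> float:
    """Arithmetic intensity (FLOPs/byte) above which a kernel is compute-bound."""
    return peak_tflops / bandwidth_tb_per_s


# Hypothetical accelerator: 1,000 TFLOPS peak and 3 TB/s memory bandwidth.
PEAK, BANDWIDTH = 1000.0, 3.0
for intensity in (2.0, 50.0, 500.0):
    limit = attainable_tflops(PEAK, BANDWIDTH, intensity)
    bound = "compute" if intensity >= ridge_point(PEAK, BANDWIDTH) else "memory"
    print(f"{intensity:6.1f} FLOPs/byte -> {limit:7.1f} TFLOPS ({bound}-bound)")
```

Under these assumptions, a kernel needs more than about 333 FLOPs per byte before the compute peak, rather than memory bandwidth, becomes the ceiling.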

Latency measures response time for inference requests. Low-latency hardware enables real-time applications like autonomous vehicles, robotics, and interactive AI assistants. Inference latency varies from microseconds for small models to seconds for large language models.

Benchmark Suites and Standards

MLPerf, maintained by the MLCommons consortium, has emerged as the industry-standard benchmark suite for AI hardware. It includes separate benchmarks for training and inference across models for image classification, object detection, language processing, and recommendation systems. Because submissions follow common rules, MLPerf results provide some of the most reliable cross-platform comparisons available.

SPEC ML benchmarks focus on inference performance using standardized models and datasets. These benchmarks emphasize real-world deployment scenarios rather than peak theoretical performance.

Custom benchmarks tailored to specific applications often reveal performance characteristics hidden by standard tests. Large language model benchmarks measure tokens per second, time to first token, and throughput at various batch sizes.
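The language model metrics named above can be derived from simple timestamps. The following is a minimal sketch, assuming each request records when it was sent and when each generated token arrived; the `RequestTrace` type and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RequestTrace:
    """Timestamps (in seconds) recorded for one streamed generation request."""
    sent_at: float
    token_times: List[float]  # arrival time of each generated token


def time_to_first_token(trace: RequestTrace) -> float:
    """Delay between sending the request and receiving the first token."""
    return trace.token_times[0] - trace.sent_at


def decode_tokens_per_second(trace: RequestTrace) -> float:
    """Generation rate for one request after the first token has arrived."""
    if len(trace.token_times) < 2:
        return 0.0
    span = trace.token_times[-1] - trace.token_times[0]
    return (len(trace.token_times) - 1) / span


def aggregate_tokens_per_second(traces: List[RequestTrace]) -> float:
    """System throughput: all tokens divided by the wall-clock window."""
    total_tokens = sum(len(t.token_times) for t in traces)
    start = min(t.sent_at for t in traces)
    end = max(t.token_times[-1] for t in traces)
    return total_tokens / (end - start)
```

Reporting the per-request rate and the aggregate rate separately matters because batching usually raises the second while lowering the first.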

Efficiency Metrics

Performance per watt measures computational efficiency, critical for data centers managing massive electricity costs and cooling requirements. Current data center accelerators deliver on the order of a few TFLOPS per watt at low precision for AI workloads.

Performance per dollar provides the ultimate metric for commercial viability. The fastest hardware means little if costs prohibit adoption. Cloud platform pricing based on hourly rates directly reflects this efficiency metric.

Total cost of ownership includes hardware acquisition, power consumption, cooling infrastructure, and maintenance over the system’s operational lifetime. This holistic view often reveals that mid-tier hardware with better efficiency delivers superior long-term value.
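The three efficiency metrics above reduce to straightforward arithmetic. The sketch below is a simplified model, assuming cooling overhead can be folded into a single power usage effectiveness (PUE) multiplier and that maintenance is a flat annual fee. All parameter names and default values are illustrative assumptions.

```python
HOURS_PER_YEAR = 365 * 24


def performance_per_watt(tflops: float, watts: float) -> float:
    return tflops / watts


def performance_per_dollar(tflops: float, usd_per_hour: float) -> float:
    """TFLOPS obtained per dollar of hourly cloud spend."""
    return tflops / usd_per_hour


def total_cost_of_ownership(hardware_usd: float,
                            power_kw: float,
                            years: float,
                            usd_per_kwh: float,
                            pue: float = 1.5,
                            annual_maintenance_usd: float = 0.0,
                            utilization: float = 1.0) -> float:
    """Acquisition cost plus energy (including cooling via PUE) plus maintenance."""
    powered_hours = years * HOURS_PER_YEAR * utilization
    energy_kwh = power_kw * powered_hours * pue
    return hardware_usd + energy_kwh * usd_per_kwh + annual_maintenance_usd * years


# Hypothetical comparison over four years at $0.10 per kWh.
premium = total_cost_of_ownership(30_000, 0.70, 4, 0.10)
mid_tier = total_cost_of_ownership(8_000, 0.35, 4, 0.10)
print(f"premium: ${premium:,.0f}  mid-tier: ${mid_tier:,.0f}")
```

Dividing each total by the work actually completed over the same period gives a cost per unit of work, which is the figure that makes mid-tier and premium hardware comparable.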

2. Top GPU Rankings for AI Training

Graphics processing units remain the dominant hardware for training large AI models. Current AI hardware benchmarks show NVIDIA maintaining leadership while competition intensifies.

NVIDIA H100 and H200: Current Champions

The NVIDIA H100 Tensor Core GPU remains the reference point for AI training performance. With 80 billion transistors and fourth-generation Tensor Cores, the H100 SXM delivers roughly 2,000 teraFLOPS of dense FP8 throughput (about 4,000 with structured sparsity), the precision increasingly used in AI training. Memory bandwidth reaches about 3.35 TB/s through HBM3 memory technology.

MLPerf training benchmarks show the H100 training GPT-3 style models up to 3.5 times faster than the previous generation A100. ResNet-50 image classification completes in under 15 minutes on an 8-GPU system compared to nearly an hour on A100 systems.

The H200, announced in late 2023 and shipping during 2024, extends the H100 architecture with 141 GB of HBM3e memory versus 80 GB on the H100. This expanded memory enables training larger models on fewer GPUs, which is crucial for research applications pushing model size boundaries.

NVLink 4.0 interconnect technology enables 900 GB/s bidirectional bandwidth between GPUs, allowing near-linear scaling when training across multiple accelerators. Systems with 256 H100 GPUs can train models with trillions of parameters.
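"Near-linear scaling" can be quantified as scaling efficiency: measured multi-GPU throughput divided by the ideal of N times single-GPU throughput. A minimal sketch follows; the throughput figures are hypothetical and only show the calculation.

```python
def scaling_efficiency(single_gpu_throughput: float,
                       multi_gpu_throughput: float,
                       gpu_count: int) -> float:
    """Fraction of ideal linear speedup achieved (1.0 means perfectly linear)."""
    ideal = single_gpu_throughput * gpu_count
    return multi_gpu_throughput / ideal


# Hypothetical measurement: 100 samples/s on one GPU, 760 samples/s on eight.
efficiency = scaling_efficiency(100.0, 760.0, 8)
print(f"Scaling efficiency on 8 GPUs: {efficiency:.0%}")
```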

NVIDIA L40S and L4: Versatile Performers

The L40S targets workloads requiring both AI computation and graphics capabilities. With 48 GB memory and strong FP8 performance, it serves as a cost-effective option for organizations running mixed workloads.

The L4 provides efficient inference and lighter training workloads at significantly lower power consumption. At 72 watts TDP, L4 systems reduce operational costs while delivering respectable performance for smaller models.

AMD MI300X: The Rising Challenger

AMD’s MI300X represents the most significant challenge to NVIDIA’s dominance in recent years. With 192 GB of HBM3 memory, more than the H100 or H200 offers, the MI300X excels at training extremely large models that would otherwise have to be split across multiple GPUs.

Benchmark results show MI300X competitive with H100 in many training scenarios, particularly for models that benefit from larger per-GPU memory. Training throughput for large language models approaches H100 performance while offering better memory capacity.
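To see why per-GPU memory capacity matters, it helps to estimate how many devices a model needs for its state alone. The sketch below assumes 2 bytes per parameter for FP16 inference weights and about 16 bytes per parameter for mixed-precision training with Adam (FP16 weights and gradients plus FP32 master weights and two optimizer states). It ignores activations and KV cache, so real requirements are higher.

```python
import math

BYTES_PER_PARAM_FP16_INFERENCE = 2   # weights only
BYTES_PER_PARAM_ADAM_TRAINING = 16   # weights, gradients, and optimizer state


def model_state_gb(parameter_count: float, bytes_per_param: int) -> float:
    return parameter_count * bytes_per_param / 1e9


def min_gpus_for_memory(parameter_count: float,
                        bytes_per_param: int,
                        gpu_memory_gb: float) -> int:
    """Lower bound on GPU count based on memory capacity alone."""
    return math.ceil(model_state_gb(parameter_count, bytes_per_param) / gpu_memory_gb)


# Capacities quoted in this article: 80 GB, 141 GB, and 192 GB per GPU.
for capacity_gb in (80, 141, 192):
    serve = min_gpus_for_memory(70e9, BYTES_PER_PARAM_FP16_INFERENCE, capacity_gb)
    train = min_gpus_for_memory(70e9, BYTES_PER_PARAM_ADAM_TRAINING, capacity_gb)
    print(f"{capacity_gb:3d} GB per GPU: {serve} to serve, {train} to train a 70B model")
```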

AMD’s ROCm software ecosystem has matured significantly, though it still lags NVIDIA’s CUDA in terms of framework support and optimization. Organizations willing to invest in ROCm integration can achieve excellent price-performance ratios.

Intel Gaudi2 and Gaudi3: Open Alternative

Intel’s Gaudi accelerators pursue an open-ecosystem strategy without proprietary software lock-in. Gaudi2 delivers competitive training performance at attractive pricing, particularly for language models.

Early Gaudi3 benchmarks suggest performance approaching H100 levels with improved efficiency. Intel’s strategy of building Gaudi around standard networking and open software appeals to organizations seeking vendor diversity.

However, software maturity remains a concern. Framework support is growing but doesn’t match NVIDIA’s comprehensive ecosystem. Organizations must carefully evaluate whether their specific models and frameworks perform optimally on Gaudi hardware.

3. Inference Accelerators: Specialized Performance Leaders

While training demands raw power, inference optimization requires different architectural tradeoffs. AI hardware benchmarks for inference emphasize latency, throughput, and efficiency over peak FLOPS.

NVIDIA L4 and L40: Inference Optimized

NVIDIA’s L-series GPUs prioritize inference workloads with optimized Tensor Cores, efficient power consumption, and competitive pricing. The L4 is rated at up to 485 TOPS of INT8 performance (with sparsity) while consuming only 72 watts, making it well suited to edge deployment and cost-sensitive inference applications.

MLPerf inference benchmarks show L4 completing ResNet-50 image classification at 14,000 queries per second with sub-millisecond latency. BERT language model inference achieves 3,500 queries per second, excellent for production deployment.

The L40 provides higher throughput for data center inference, processing over 25,000 ResNet-50 images per second. Its 48 GB memory handles large language models that exceed typical inference accelerator capacity.

Google TPU v5: Purpose-Built Excellence

Google’s fifth-generation Tensor Processing Unit demonstrates what purpose-built AI hardware can achieve. Optimized for TensorFlow and JAX workloads, each TPU v5p chip delivers about 459 TFLOPS of bfloat16 performance with strong efficiency.

TPU v5e variants target cost-optimized inference with performance sufficient for most production workloads at significantly lower pricing than v5p. Google’s infrastructure integrates TPUs seamlessly with their cloud services, providing excellent developer experience.

However, TPU availability remains limited to Google Cloud Platform. Organizations committed to other cloud providers or on-premises deployment cannot access TPU hardware, limiting its applicability despite impressive performance.

AWS Inferentia2 and Trainium: Cloud-Native Innovation

Amazon’s custom silicon demonstrates the cloud provider trend toward proprietary accelerators. Inferentia2 delivers high-throughput inference at compelling price points compared to GPU-based alternatives.

AWS reports that Inferentia2 delivers up to 4x higher throughput and up to 10x lower latency than first-generation Inferentia. Image model inference achieves over 12,000 images per second for ResNet-50, competitive with NVIDIA’s L-series.

Trainium targets training workloads with performance approaching GPU solutions at lower cost. Early adopters report 40-50% cost savings for training large language models compared to GPU instances, though model compatibility requires careful evaluation.

AWS’s Neuron SDK provides framework support for PyTorch and TensorFlow, but migrating models to Inferentia requires optimization work. Organizations must weigh potential cost savings against integration effort.

Qualcomm Cloud AI 100: Edge Inference Leader

Qualcomm’s Cloud AI 100 optimizes for edge and embedded inference applications. Despite the “cloud” name, this accelerator excels in edge deployments where power efficiency and small form factor matter.

Delivering 400 TOPS at under 75 watts, Cloud AI 100 achieves impressive performance per watt for edge applications. Computer vision models process 1080p video streams with single-digit millisecond latency, enabling real-time applications.

The accelerator supports precision formats from INT4 to FP16, allowing developers to balance accuracy against performance for specific applications. Extensive automotive, robotics, and industrial edge deployments demonstrate production readiness.
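The accuracy cost of lower precision can be illustrated with a toy quantizer. This is a minimal sketch of symmetric per-tensor integer quantization in plain Python, used here only to show how rounding error grows as bit width shrinks. It is not how any particular accelerator or SDK implements quantization.

```python
from typing import List, Tuple


def quantize_symmetric(values: List[float], bits: int) -> Tuple[List[int], float]:
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    return [q * scale for q in quantized]


def max_abs_error(values: List[float], bits: int) -> float:
    """Largest round-trip error after quantizing and dequantizing."""
    quantized, scale = quantize_symmetric(values, bits)
    restored = dequantize(quantized, scale)
    return max(abs(v - r) for v, r in zip(values, restored))


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]  # hypothetical weight values
for bits in (8, 4):
    print(f"INT{bits}: max round-trip error = {max_abs_error(weights, bits):.4f}")
```

The round-trip error is bounded by half the scale, so each bit removed roughly doubles the worst-case error for the same value range.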

4. CPU Performance for AI Workloads

While accelerators dominate training and inference, CPUs remain crucial for preprocessing, orchestration, and certain AI workloads. Modern AI hardware benchmarks include CPU performance for complete system evaluation.

Intel Xeon Sapphire Rapids: AI Acceleration Built-In

Intel’s 4th Generation Xeon processors integrate AI acceleration through Advanced Matrix Extensions (AMX). These specialized instructions accelerate matrix operations common in AI without requiring separate accelerators.

Benchmarks show AMX delivering up to 3x faster inference for quantized INT8 models compared to previous Xeon generations. Deep learning frameworks like PyTorch and TensorFlow automatically leverage AMX when available, providing performance improvements without code changes.
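Whether a given Linux host exposes AMX can be checked from the CPU feature flags. The sketch below assumes a Linux system where `/proc/cpuinfo` lists flags such as `amx_tile`, `amx_int8`, and `amx_bf16`. It only reports what the kernel advertises and does not confirm that a particular framework build actually uses AMX.

```python
from pathlib import Path
from typing import Set


def amx_features(cpuinfo_text: str) -> Set[str]:
    """Return the AMX-related flags found on the first 'flags' line."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, flags = line.partition(":")
            return {flag for flag in flags.split() if flag.startswith("amx")}
    return set()


def read_cpuinfo(path: str = "/proc/cpuinfo") -> str:
    """Read the CPU description, or return an empty string if unavailable."""
    cpuinfo = Path(path)
    return cpuinfo.read_text() if cpuinfo.exists() else ""


found = amx_features(read_cpuinfo())
print("AMX flags:", ", ".join(sorted(found)) if found else "none detected")
```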

For organizations running mixed workloads combining traditional computing with AI inference, Sapphire Rapids CPUs eliminate the need for separate inference accelerators while simplifying deployment and management.

AMD EPYC Genoa and Bergamo: High-Core-Count Efficiency

AMD’s EPYC processors deliver exceptional performance for AI data preprocessing and model serving. Genoa chips with up to 96 cores excel at parallel preprocessing pipelines that feed data to accelerators.

Bergamo variants optimize for cloud-native AI applications with up to 128 cores focused on efficiency rather than peak single-threaded performance. This architecture suits containerized AI inference deployments where high concurrent request handling matters more than per-request latency.

ARM-Based Alternatives: Graviton and Altra

AWS Graviton3 processors demonstrate ARM architecture viability for AI workloads. Benchmarks show competitive inference performance for computer vision and NLP models with superior power efficiency compared to x86 alternatives.

Ampere Altra processors target cloud-native AI applications with predictable per-core performance and strong efficiency. Organizations building inference services report 30-40% lower operational costs using Altra compared to equivalent x86 deployments.

5. Cloud Platform AI Infrastructure Rankings

For most organizations, cloud platforms provide the practical access point for cutting-edge AI hardware. AI hardware benchmarks translate into real-world performance and cost metrics on major cloud providers.

Google Cloud Platform: TPU Advantage

GCP’s exclusive access to TPU hardware provides unmatched performance for TensorFlow and JAX workloads. TPU v5p instances deliver the fastest training times for large language models among major cloud providers, reducing weeks-long training runs to days.

Pricing for TPU instances varies significantly from GPU equivalents. For compatible workloads, TPU training can cost 40-50% less than equivalent GPU instances. However, model compatibility requirements and framework limitations constrain TPU applicability.

GCP also offers comprehensive GPU options including NVIDIA H100, L4, and A100 instances. Flexible machine configurations and preemptible instances provide cost optimization opportunities for batch training workloads.

Amazon Web Services: Broadest Hardware Selection

AWS provides the widest variety of AI hardware options including NVIDIA GPUs, proprietary Inferentia2 and Trainium chips, and Habana Gaudi accelerators. This diversity enables optimization for specific workload requirements and budget constraints.

P5 instances featuring NVIDIA H100 GPUs deliver top-tier training performance. Benchmark results show training throughput competitive with other clouds while AWS’s mature infrastructure provides reliability and integration advantages.

Inf2 instances powered by Inferentia2 provide the most cost-effective inference for supported models. Organizations processing millions of inference requests daily report 60-70% cost reduction compared to GPU-based inference.

Microsoft Azure: Enterprise Integration Leader

Azure’s AI infrastructure emphasizes enterprise integration and hybrid deployment options. ND H100 v5 instances provide NVIDIA H100 access with deep integration into Azure’s AI services and tooling.

Azure Machine Learning’s managed infrastructure automatically selects appropriate hardware for specific workloads, abstracting hardware selection complexity. This approach suits organizations prioritizing developer productivity over hardware optimization.

Azure Arc enables hybrid deployments where training occurs in the cloud while inference runs on-premises, addressing data sovereignty and latency requirements common in enterprise scenarios.

Oracle Cloud Infrastructure: Price-Performance Focus

OCI has aggressively pursued AI workloads with compelling price-performance ratios. H100 instance pricing significantly undercuts competitors while delivering equivalent performance, particularly for bare-metal instances providing direct hardware access.

OCI’s ultra-low-latency RDMA networking between compute instances enables efficient distributed training scaling to hundreds of GPUs with minimal overhead. Organizations training very large models report excellent scaling efficiency.

However, OCI’s smaller ecosystem and fewer AI-specific managed services mean organizations must handle more infrastructure management themselves. The tradeoff between cost savings and operational complexity requires careful evaluation.

6. Specialized AI Hardware and Emerging Technologies

Beyond mainstream GPUs and cloud accelerators, specialized hardware addresses specific AI workload requirements highlighted in AI hardware benchmarks for niche applications.

Cerebras Wafer-Scale Engine: Massive Parallelism

The Cerebras CS-3 system is built around a processor made from an entire silicon wafer rather than an individual chip, creating the world’s largest processor with 900,000 AI-optimized cores. Keeping computation on a single wafer avoids much of the inter-chip communication overhead of distributed training.

Benchmarks demonstrate exceptional performance for large language model training. Models with hundreds of billions of parameters train in days rather than weeks, with near-perfect scaling efficiency that multi-GPU systems cannot match.

However, Cerebras systems require significant capital investment and specialized expertise. They suit research institutions and large AI companies pushing model size boundaries more than typical enterprise applications.

Graphcore IPU: Graph-Optimized Architecture

Graphcore’s Intelligence Processing Units optimize for graph neural networks and sparse model architectures. The IPU-POD architecture achieves excellent efficiency for these workloads compared to conventional GPUs.

Applications in drug discovery, recommendation systems, and financial modeling demonstrate IPU advantages. Organizations working with graph data structures report 3-5x training speedups compared to GPU implementations.

SambaNova DataScale: Reconfigurable Performance

SambaNova’s reconfigurable dataflow architecture adapts hardware topology to specific model architectures. This flexibility delivers consistent performance across diverse AI workloads without the optimization effort GPUs require.

Early enterprise deployments show strong results for natural language processing and computer vision applications. The system’s ability to handle multiple concurrent workloads efficiently suits organizations running diverse AI models.

Groq LPU: Inference Latency Champion

Groq’s Language Processing Unit architecture achieves record-breaking inference latency for large language models. Early benchmarks show LPU systems generating tokens 10x faster than GPU-based solutions with deterministic latency.

This predictable, ultra-low latency enables interactive AI applications previously impossible. Real-time translation, conversational AI, and code generation benefit enormously from LPU’s architectural advantages.

Limited availability and ecosystem immaturity currently constrain LPU adoption. As software support matures and deployment options expand, Groq’s technology may significantly impact inference-focused applications.

7. Edge AI Hardware: Performance Meets Efficiency

Edge deployment demands hardware balancing performance, power efficiency, and physical size. AI hardware benchmarks for edge applications emphasize TOPS per watt and thermal design power.

NVIDIA Jetson Orin: Developer Platform Leader

The Jetson Orin family delivers scalable edge AI performance, from roughly 20 TOPS in the entry-level Orin Nano to 275 TOPS in the AGX Orin 64GB. This range covers applications from small IoT devices to autonomous vehicles.

Computer vision benchmarks show Orin processing multiple 4K camera streams simultaneously with sub-20ms latency. YOLO object detection runs at 60 FPS on 1080p video while consuming under 15 watts.

CUDA support and compatibility with desktop GPU development workflows make Jetson the easiest edge platform for developers familiar with NVIDIA’s ecosystem. Models developed on data center GPUs deploy to Jetson with minimal modification.

Intel Movidius and AI Boost: Integrated Efficiency

Intel’s Movidius VPUs integrate into devices ranging from drones to security cameras. The latest Movidius generations deliver 4-10 TOPS at under 2.5 watts, enabling battery-powered AI applications.

Intel AI Boost in Core Ultra processors brings neural processing to laptops and edge servers. Benchmark results show efficient inference for productivity AI features like background blur, image enhancement, and voice processing without impacting battery life.

Qualcomm Snapdragon and Hexagon NPU: Mobile AI Leader

Snapdragon platforms power billions of mobile devices with increasingly capable AI accelerators. The Hexagon NPU in flagship Snapdragon chips delivers over 45 TOPS, enabling sophisticated on-device AI without cloud dependence.

Language model inference on Snapdragon demonstrates impressive capabilities. Models with several billion parameters run locally on smartphones, enabling private, offline AI assistants and real-time translation.

Google Coral: Specialized Edge Inference

Google’s Coral Edge TPU provides purpose-built inference acceleration in compact form factors. USB accelerator modules add AI capability to existing systems, while system-on-module variants enable custom edge device development.

Benchmarks show Coral processing MobileNet image classification at 400 FPS while consuming only 2 watts. This efficiency enables battery-powered wildlife cameras, smart home devices, and industrial sensors with always-on AI capabilities.

8. Memory and Storage Technologies for AI

AI performance increasingly depends on memory bandwidth and storage speed rather than pure computational throughput. AI hardware benchmarks now include memory subsystem performance as critical metrics.

High Bandwidth Memory (HBM3 and Beyond)

HBM3 delivers 819 GB/s bandwidth per stack with lower power consumption than previous generations. The latest AI accelerators integrate multiple HBM3 stacks achieving total bandwidth exceeding 3 TB/s.
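The per-stack figure follows from the interface width and the per-pin data rate. The sketch below assumes the commonly cited HBM3 parameters of a 1024-bit interface at 6.4 Gb/s per pin; those two inputs are assumptions added here for illustration, not values stated in this article.

```python
def stack_bandwidth_gb_per_s(pin_rate_gbit_per_s: float, bus_width_bits: int) -> float:
    """Peak bandwidth of one memory stack in GB/s (8 bits per byte)."""
    return pin_rate_gbit_per_s * bus_width_bits / 8


def total_bandwidth_tb_per_s(per_stack_gb_per_s: float, stack_count: int) -> float:
    return per_stack_gb_per_s * stack_count / 1000


hbm3_stack = stack_bandwidth_gb_per_s(6.4, 1024)
print(f"One HBM3 stack: {hbm3_stack:.1f} GB/s")
print(f"Four stacks:    {total_bandwidth_tb_per_s(hbm3_stack, 4):.2f} TB/s")
```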

HBM3e pushes bandwidth to roughly 1.2 TB/s per stack while increasing capacity to 36 GB per stack. An accelerator with four such stacks carries 144 GB, enough to run models that previously required distributed systems.

Future HBM4 technology promises 1.5 TB/s or more per stack with further capacity increases. Because computational throughput has been growing faster than memory bandwidth, future AI performance will increasingly be determined by memory subsystems.

NVMe and Computational Storage

Training large models requires rapid dataset access. NVMe SSD arrays delivering millions of IOPS eliminate storage bottlenecks in data pipelines. Direct storage technologies such as NVIDIA GPUDirect Storage let GPUs read SSD data over a direct path that bypasses CPU memory, reducing latency and CPU overhead.

Computational storage devices with integrated processing accelerate data preprocessing. These devices perform filtering, decompression, and format conversion without moving data through system memory, improving overall pipeline efficiency.

Memory Pooling and Disaggregation

CXL (Compute Express Link) technology enables memory pooling across multiple hosts and accelerators. This allows flexible memory allocation matching workload requirements without static per-device limits.

Disaggregated memory architectures separate compute and memory resources, enabling independent scaling. Organizations train larger models by adding memory without proportionally increasing expensive compute resources.

9. Performance Optimization and Hardware Selection Strategy

Understanding benchmarks matters less than applying that knowledge to select optimal hardware for specific requirements. This section translates AI hardware benchmarks into practical decision frameworks.

Workload Characterization

Training versus inference requirements dictate different hardware priorities. Training benefits from maximum computational throughput and memory capacity, justifying premium accelerator costs. Inference prioritizes throughput, latency, and efficiency, often achieving better ROI with mid-tier hardware.

Model architecture influences hardware selection significantly. Transformer models, especially during autoregressive inference, stress memory bandwidth and capacity more than computational throughput. Convolutional neural networks for computer vision utilize tensor cores efficiently, making them ideal GPU workloads.

Batch size capabilities determine throughput for inference applications. Large batch sizes maximize accelerator utilization but increase latency. Real-time applications require small batches despite lower efficiency, while offline batch processing benefits from large batches.
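The batch size tradeoff can be made concrete with a simple cost model. The sketch below assumes batch latency grows linearly with batch size (a fixed overhead plus a per-sample cost), which is a simplification. The timing constants are hypothetical and would need to be measured on real hardware.

```python
def batch_latency_ms(batch_size: int, fixed_ms: float, per_sample_ms: float) -> float:
    """Assumed linear model: fixed launch overhead plus per-sample compute."""
    return fixed_ms + per_sample_ms * batch_size


def throughput_per_second(batch_size: int, fixed_ms: float, per_sample_ms: float) -> float:
    return batch_size / (batch_latency_ms(batch_size, fixed_ms, per_sample_ms) / 1000)


def largest_batch_within(budget_ms: float, fixed_ms: float, per_sample_ms: float,
                         max_batch: int = 1024) -> int:
    """Largest batch whose latency fits the budget, or 0 if none does."""
    best = 0
    for batch_size in range(1, max_batch + 1):
        if batch_latency_ms(batch_size, fixed_ms, per_sample_ms) > budget_ms:
            break
        best = batch_size
    return best


# Hypothetical accelerator: 5 ms fixed overhead, 0.5 ms per sample.
FIXED_MS, PER_SAMPLE_MS = 5.0, 0.5
for budget_ms in (10.0, 50.0):
    batch = largest_batch_within(budget_ms, FIXED_MS, PER_SAMPLE_MS)
    rate = throughput_per_second(batch, FIXED_MS, PER_SAMPLE_MS)
    print(f"{budget_ms:.0f} ms budget -> batch {batch}, {rate:.0f} samples/s")
```

Under this model, a looser latency budget lets the fixed overhead be amortized over more samples, which is why offline processing achieves higher utilization than real-time serving.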

Cost Optimization Strategies

Reserved instances and committed use discounts reduce cloud computing costs 40-60% compared to on-demand pricing. Organizations with predictable workloads achieve substantial savings through commitment-based pricing.

Spot instances and preemptible VMs provide deep discounts for interruptible workloads. Training jobs with checkpointing handle interruptions gracefully, enabling 70-90% cost reduction for non-urgent training.
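Handling interruptions gracefully comes down to saving progress periodically and resuming from the last saved state. The sketch below shows the pattern with a placeholder training step and a JSON file; a real job would save model and optimizer state through its framework instead. The `stop_after` parameter exists only to simulate a preemption.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file, then rename, so an interrupted write cannot corrupt the file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as handle:
        json.dump(state, handle)
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> dict:
    if not os.path.exists(path):
        return {"step": 0, "loss": None}
    with open(path) as handle:
        return json.load(handle)


def train(path: str, total_steps: int, checkpoint_every: int,
          stop_after: Optional[int] = None) -> dict:
    """Resume from the last checkpoint and run until done or 'preempted'."""
    state = load_checkpoint(path)
    steps_this_run = 0
    while state["step"] < total_steps:
        if stop_after is not None and steps_this_run >= stop_after:
            return state  # simulated preemption: unsaved steps are lost
        state["step"] += 1
        state["loss"] = 1.0 / state["step"]  # placeholder for a real training step
        steps_this_run += 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state


with tempfile.TemporaryDirectory() as workdir:
    checkpoint_path = os.path.join(workdir, "checkpoint.json")
    train(checkpoint_path, total_steps=100, checkpoint_every=10, stop_after=25)
    print("Resuming from step", load_checkpoint(checkpoint_path)["step"])
    print("Finished at step", train(checkpoint_path, 100, 10)["step"])
```

The checkpoint interval sets the tradeoff: frequent saves cost time on every run, while infrequent saves lose more work per interruption.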

Hybrid strategies combining owned infrastructure for baseline workloads with cloud bursting for peak demand optimize total cost. Organizations train frequently-used models on-premises while leveraging cloud for experimental workloads.
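The choice between owned and rented capacity can be framed as a break-even calculation. This is a deliberately simple sketch that assumes a constant cloud hourly rate and a constant owned operating cost per hour. The prices are hypothetical, and the model ignores depreciation and resale value.

```python
from typing import Optional

HOURS_PER_YEAR = 365 * 24


def break_even_hours(purchase_usd: float,
                     owned_usd_per_hour: float,
                     cloud_usd_per_hour: float) -> Optional[float]:
    """Hours of use after which owning becomes cheaper, or None if it never does."""
    hourly_saving = cloud_usd_per_hour - owned_usd_per_hour
    if hourly_saving <= 0:
        return None
    return purchase_usd / hourly_saving


def break_even_years(hours: float, utilization: float) -> float:
    """Calendar time to reach the break-even point at a given utilization."""
    return hours / (HOURS_PER_YEAR * utilization)


# Hypothetical prices: $30,000 to buy, $0.50/h to run, $3.50/h to rent.
hours = break_even_hours(30_000, 0.50, 3.50)
if hours is not None:
    for utilization in (0.9, 0.2):
        print(f"{utilization:.0%} utilization: break-even after "
              f"{break_even_years(hours, utilization):.1f} years")
```

Steady baseline workloads reach break-even quickly, while bursty or experimental workloads may never get there, which matches the hybrid split described above.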

Future-Proofing Considerations

Model size growth trends suggest memory capacity will increasingly constrain AI development. Hardware selection should prioritize memory capacity and bandwidth alongside computational performance.

Software ecosystem maturity determines hardware viability. NVIDIA’s CUDA ecosystem remains most mature, but alternative accelerators with open software stacks offer compelling price-performance if frameworks support them adequately.

Vendor lock-in risks deserve consideration. Proprietary cloud accelerators may offer short-term cost advantages but create long-term dependencies. Standard GPU instances provide flexibility to move workloads across providers and environments.

10. The Road Ahead: Next-Generation AI Hardware

Looking beyond current AI hardware benchmarks, emerging technologies promise revolutionary performance improvements and entirely new AI capabilities.

Next-Generation GPU Architectures

NVIDIA’s Blackwell architecture, the successor to the Hopper (H100) generation, promises roughly double the training performance of its predecessor. Improved NVLink connectivity and expanded memory capacity will enable training even larger models.

AMD’s CDNA 4 architecture and Intel’s Falcon Shores represent increasing competition in the accelerator market. This competition benefits users through better performance, pricing, and innovation.

Optical Computing and Photonic AI

Optical computing uses light instead of electricity for computation, promising large improvements in speed and energy efficiency for operations such as matrix multiplication. Several startups have demonstrated prototype optical neural networks with very low latency.

While commercial deployment remains years away, photonic AI could transform inference applications requiring ultra-low latency and massive throughput simultaneously.

Quantum-Classical Hybrid Systems

Quantum computing won’t replace classical AI hardware but may augment it for specific problems. Quantum algorithms for molecular simulation, optimization, and certain machine learning tasks promise speedups that are out of reach for classical hardware, though practical advantage at scale has yet to be shown.

Hybrid systems combining quantum processors with classical AI accelerators will tackle problems beyond current capabilities. Drug discovery, materials science, and financial optimization represent early application areas.

Neuromorphic Computing

Brain-inspired neuromorphic chips like Intel’s Loihi 2 and IBM’s NorthPole process information fundamentally differently than conventional accelerators. These systems excel at continuous learning, temporal pattern recognition, and ultra-efficient inference.

As neuromorphic software ecosystems mature, these architectures may enable always-on AI applications in power-constrained environments from wearables to space systems.

3D Chip Stacking and Advanced Packaging

Three-dimensional chip stacking places memory directly atop computational cores, dramatically reducing latency and increasing bandwidth. This architecture eliminates the chip-to-chip communication bottleneck limiting current accelerators.

Advanced packaging technologies enable heterogeneous integration combining different chip technologies optimally. Future AI systems will integrate specialized processors, high-bandwidth memory, and optical interconnects in single packages.

Conclusion: Making Informed Hardware Choices

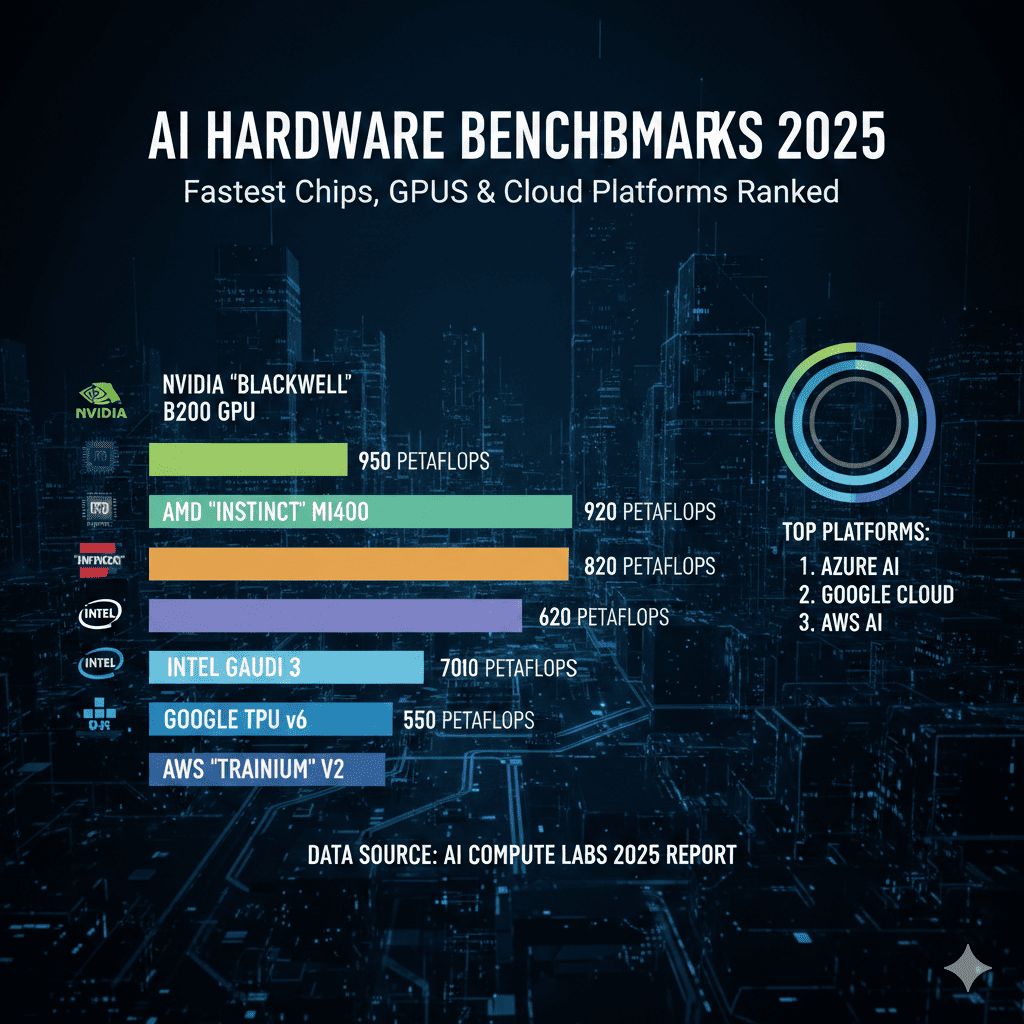

The landscape of AI hardware benchmarks in 2025 reveals a vibrant, rapidly evolving ecosystem with options suited to virtually any requirement and budget. NVIDIA maintains performance leadership but faces increasingly capable competition from AMD, Intel, and cloud providers’ custom silicon.

For most organizations, the optimal hardware choice depends less on peak benchmark performance than on matching capabilities to specific requirements. Training massive models demands top-tier GPUs or TPUs despite high costs. Production inference often achieves better ROI with efficient mid-tier accelerators. Edge applications require specialized hardware balancing performance, power, and size.

Cloud platforms democratize access to cutting-edge hardware without capital investment, though sustained workloads may prove more economical on owned infrastructure. Hybrid strategies combining owned hardware for baseline capacity with cloud resources for peak demand often deliver optimal cost-efficiency.

Looking ahead, AI hardware will continue rapid advancement. Memory capacity and bandwidth will increasingly determine performance as model sizes grow. Competition across GPU vendors and cloud providers’ custom accelerators will drive innovation while improving price-performance ratios.

The organizations succeeding in AI will be those making informed hardware decisions based on rigorous workload analysis rather than chasing benchmark leadership. By understanding how AI hardware benchmarks translate to real-world performance for your specific applications, you can optimize infrastructure investments for maximum return.
