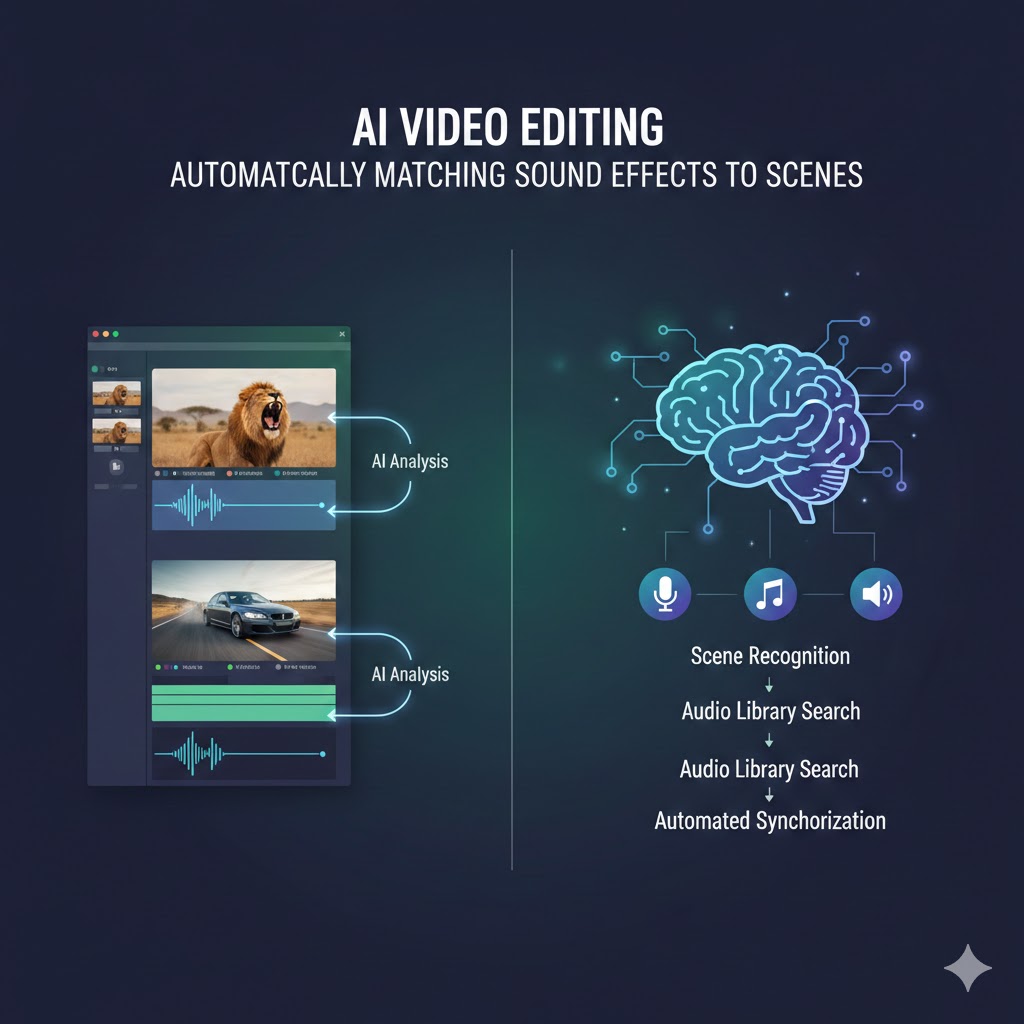

AI video editing technologies have substantially changed video production. One of the most useful developments is the automatic matching of sound effects to visual scenes, a capability that is reshaping how creators approach post-production. The technology analyzes video content frame by frame, identifies key moments, and adds appropriate audio elements with little or no manual intervention.

AI video editing has democratized professional-quality sound design, making it accessible to creators at every skill level. What once required hours of meticulous work by sound engineers can now be accomplished in minutes, allowing filmmakers, content creators, and marketers to focus on storytelling rather than technical minutiae. The integration of machine learning algorithms has enabled systems to understand context, emotion, and timing with remarkable precision.

1. Understanding AI-Powered Sound Effect Matching

The foundation of automatic sound effect matching lies in sophisticated computer vision and audio analysis algorithms. These systems employ deep learning models trained on millions of video clips and corresponding audio tracks, enabling them to recognize patterns and make intelligent decisions about sound placement.

How AI Analyzes Visual Content

AI video editing platforms utilize convolutional neural networks to break down video footage into discrete elements. The technology identifies objects, actions, movements, and environmental conditions within each frame. For instance, when the AI detects a door closing, footsteps on pavement, or waves crashing on a shore, it catalogs these visual cues and prepares to match them with appropriate audio.

The analysis extends beyond simple object recognition. Advanced systems evaluate motion vectors, scene transitions, lighting changes, and even facial expressions to determine the emotional tone and pacing requirements. This comprehensive understanding allows the AI to select sound effects that not only match the action but enhance the overall narrative impact.

Audio Library Integration and Selection

Modern AI video editing systems connect to extensive sound effect libraries containing thousands of categorized audio clips. These libraries are meticulously organized with metadata tags describing each sound’s characteristics, duration, intensity, and contextual usage. The AI cross-references its visual analysis with this metadata to identify the most suitable matches.

The selection process considers multiple factors simultaneously. Beyond basic compatibility, the system evaluates audio quality, stereo imaging, frequency content, and how well a particular sound effect will blend with existing audio elements like dialogue or music. Machine learning algorithms continuously improve these selections based on user feedback and professional editing standards.
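The cross-referencing step can be illustrated with a small sketch. It assumes the visual analysis produces a set of text labels and that each library clip carries a set of metadata tags; the `SoundClip` type, the `rank_clips` function, and the sample library are hypothetical names made up for this example, and Jaccard overlap is just one simple scoring choice, not the method any particular platform uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SoundClip:
    """Hypothetical library entry: a clip name plus its metadata tags."""
    name: str
    tags: frozenset[str]
    duration_s: float


def rank_clips(detected: set[str], library: list[SoundClip]) -> list[tuple[SoundClip, float]]:
    """Rank clips by Jaccard overlap between detected labels and clip tags."""
    ranked = []
    for clip in library:
        union = detected | clip.tags
        score = len(detected & clip.tags) / len(union) if union else 0.0
        if score > 0:  # drop clips that share no tag with the scene
            ranked.append((clip, score))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Illustrative library and a scene where a door closes indoors.
library = [
    SoundClip("door_slam_wood", frozenset({"door", "close", "wood", "interior"}), 0.8),
    SoundClip("footsteps_pavement", frozenset({"footsteps", "pavement", "exterior"}), 2.0),
    SoundClip("waves_shore", frozenset({"waves", "shore", "water", "exterior"}), 6.0),
]
best = rank_clips({"door", "close", "interior"}, library)
```

A real system would weigh further factors named above, such as audio quality and how a clip blends with dialogue or music, on top of this tag overlap.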

Temporal Synchronization Technology

Precise timing is one of the most critical aspects of sound design. AI video editing platforms use temporal analysis to trigger sound effects at the right moment: the technology reads the frame rate, identifies action peaks, and calculates sync points on a millisecond timeline. The visual evidence itself is only as fine-grained as a single frame, which lasts about 41.7 milliseconds at 24 frames per second.

This synchronization extends to duration matching as well. If a visual action lasts three seconds, the AI can stretch, compress, or layer sound effects to maintain perfect alignment throughout the event. Advanced systems even account for anticipation and decay, adding subtle audio cues before and after the primary action to create more realistic and immersive soundscapes.
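The arithmetic behind sync points and duration matching is simple enough to sketch. The function names `frame_to_ms` and `stretch_ratio` are illustrative, not taken from any product, and the sketch covers only the timing math, not the audio time-stretching itself.

```python
def frame_to_ms(frame_index: int, fps: float) -> float:
    """Convert a frame index to a timestamp in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frame_index * 1000.0 / fps


def stretch_ratio(action_duration_s: float, clip_duration_s: float) -> float:
    """Factor by which a clip's length must be multiplied to span the action.

    Values above 1 mean the clip must be stretched, below 1 compressed.
    """
    if clip_duration_s <= 0:
        raise ValueError("clip duration must be positive")
    return action_duration_s / clip_duration_s


# An action peak detected at frame 48 of 24 fps footage,
# and a 2-second clip that has to cover a 3-second action.
onset_ms = frame_to_ms(48, 24.0)
ratio = stretch_ratio(3.0, 2.0)
```

Large ratios tend to produce audible artifacts when a clip is stretched, which is one reason a system might layer or loop sounds instead.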

2. Types of Scenes That Benefit Most from AI Sound Matching

Different genres and scene types present unique challenges and opportunities for automated sound design. Understanding where AI video editing excels helps creators leverage these tools most effectively.

Action and Movement Sequences

High-energy scenes featuring physical activity benefit tremendously from automated sound matching. Chase sequences, fight choreography, and sports footage contain numerous discrete actions that require individual sound effects. AI systems excel at tracking fast-moving subjects and layering multiple audio elements simultaneously.

The technology handles complex scenarios where multiple sound-generating actions occur concurrently. In a parkour sequence, for example, the AI video editing system might add footsteps, clothing rustles, breathing sounds, impact noises, and environmental ambiance all properly synchronized to the athlete’s movements. This level of detail would consume hours if added manually.

Environmental and Atmospheric Scenes

Nature documentaries, establishing shots, and ambient sequences require subtle layering of background sounds. AI video editing platforms analyze environmental elements like weather conditions, vegetation, water features, and wildlife to build rich atmospheric soundscapes. The systems recognize that a forest scene needs not just bird calls but also wind through leaves, distant animal sounds, and the acoustic character of an outdoor space.

The AI adjusts sound selection based on time of day, season, and location context when such information is available from metadata or visual cues. A beach scene at sunset receives different audio treatment than the same location at midday, with the system selecting appropriate wave intensities, bird species, and human activity levels.

Interior and Domestic Environments

Everyday indoor scenes contain countless opportunities for sound enhancement. Kitchen sequences, office environments, and home interiors feature numerous small actions that contribute to realism. AI video editing technology identifies objects like doors, appliances, furniture, and electronic devices, then adds corresponding sounds for interactions with these items.

The systems understand spatial acoustics, adjusting reverb and echo characteristics based on apparent room size and surface materials. A conversation in a bathroom receives different acoustic treatment than dialogue in a carpeted bedroom, creating more believable audio environments.

Transportation and Vehicle Scenes

Automotive footage, aviation sequences, and public transportation scenes present specific audio requirements. AI video editing platforms recognize vehicle types, movement speeds, and operational states to select appropriate engine sounds, mechanical noises, and transportation ambiance. The technology distinguishes between interior and exterior perspectives, adjusting audio accordingly.

Advanced systems track acceleration, deceleration, and gear changes in vehicle footage, modulating engine sounds to match visual cues. When a car rounds a corner, the AI might add tire squeal at appropriate intensity based on the apparent speed and turning radius visible in the footage.

3. Technical Components Behind Automated Sound Matching

The technological infrastructure supporting AI video editing for sound design combines multiple specialized systems working in concert. Understanding these components reveals the sophistication behind seemingly simple automated results.

Computer Vision and Object Recognition

Deep learning models trained on massive image datasets form the visual analysis foundation. These neural networks categorize objects, recognize actions, and track movements across frames. The systems employ techniques like semantic segmentation to understand not just what objects exist in a scene but how they relate spatially and functionally.

AI video editing platforms often utilize ensemble methods, combining multiple recognition models to improve accuracy. One network might specialize in human activity recognition while another focuses on environmental elements. The combined output provides comprehensive scene understanding that informs sound selection.
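One simple way to combine several recognition models is to average their per-label confidences. The sketch below assumes each model returns a dictionary of label confidences between 0 and 1; `combine_predictions`, the threshold value, and the sample outputs are assumptions for illustration, and production systems may use weighted or learned combinations instead.

```python
from collections import defaultdict


def combine_predictions(
    model_outputs: list[dict[str, float]], threshold: float = 0.5
) -> dict[str, float]:
    """Average label confidences across models and keep labels above a threshold.

    A label a model does not report counts as confidence 0 for that model.
    """
    if not model_outputs:
        return {}
    totals: dict[str, float] = defaultdict(float)
    for output in model_outputs:
        for label, confidence in output.items():
            totals[label] += confidence
    count = len(model_outputs)
    averaged = {label: total / count for label, total in totals.items()}
    return {label: score for label, score in averaged.items() if score >= threshold}


# Hypothetical outputs: one model tuned for human activity, one for environment.
activity_model = {"running": 0.9, "door": 0.2}
environment_model = {"rain": 0.8, "door": 0.9, "running": 0.5}
scene_labels = combine_predictions([activity_model, environment_model])
```

Here "rain" is dropped because only one model reports it, which shows the trade-off of plain averaging: it favors labels the models agree on and can suppress a specialist model's confident finding.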

Natural Language Processing for Context

Advanced systems incorporate NLP techniques to analyze video titles, descriptions, tags, and even subtitles or dialogue when available. This textual context helps the AI video editing system understand the intended mood, genre, and narrative purpose of scenes. A horror film requires different sound design choices than a comedy, even when depicting similar actions.

The NLP components also enable creator control through natural language commands. Users can instruct the system with phrases like “make the footsteps more menacing” or “reduce background ambiance,” and the AI adjusts its sound selection accordingly.

Audio Signal Processing

Once sound effects are selected, sophisticated digital signal processing ensures seamless integration with existing audio. AI video editing systems analyze frequency content, dynamic range, and spectral characteristics of both the new sound effects and pre-existing audio tracks. Automatic equalization, compression, and spatial positioning create cohesive soundscapes.

The processing includes intelligent ducking, where sound effects automatically reduce in volume when dialogue occurs, ensuring speech remains intelligible. Crossfading, noise reduction, and harmonic matching prevent jarring transitions between audio elements.
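Ducking can be sketched as a gain curve driven by dialogue timing. The example assumes dialogue has already been detected as a list of (start, end) intervals in seconds; the function names, the -12 dB reduction, and the 0.2 second fade are illustrative defaults rather than values from any specific tool.

```python
def duck_gain_db(
    t: float,
    dialogue: list[tuple[float, float]],
    duck_db: float = -12.0,
    fade_s: float = 0.2,
) -> float:
    """Gain in dB applied to sound effects at time t.

    Returns duck_db inside a dialogue interval, 0 dB far from dialogue,
    and a linear ramp between the two within fade_s of an interval edge.
    """
    gain = 0.0
    for start, end in dialogue:
        if start <= t <= end:
            return duck_db
        distance = start - t if t < start else t - end
        if distance < fade_s:
            gain = min(gain, duck_db * (1.0 - distance / fade_s))
    return gain


def db_to_linear(gain_db: float) -> float:
    """Convert a gain in decibels to a linear amplitude multiplier."""
    return 10.0 ** (gain_db / 20.0)


# One line of dialogue from 1.0 s to 3.0 s.
dialogue = [(1.0, 3.0)]
gain_during_speech = duck_gain_db(2.0, dialogue)
gain_before_speech = duck_gain_db(0.0, dialogue)
```

The short ramp on either side of speech avoids an abrupt volume jump, which is the same goal the crossfading mentioned above serves.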

Machine Learning and Continuous Improvement

The most powerful AI video editing platforms learn from user feedback, in the spirit of reinforcement learning, and improve their performance over time. When users accept, modify, or reject AI-generated sound placements, the system treats these decisions as signals. Pattern recognition algorithms identify which selections receive positive reinforcement and adjust future recommendations accordingly.
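A heavily simplified stand-in for this feedback loop is a running preference score per sound effect, nudged toward a reward each time the user reacts. The reward values, the learning rate, and the `update_score` name are assumptions chosen for illustration; real platforms likely use far richer models.

```python
# Hypothetical rewards for each kind of user reaction.
REWARDS = {"accept": 1.0, "modify": 0.5, "reject": 0.0}


def update_score(current: float, action: str, learning_rate: float = 0.2) -> float:
    """Move a clip's preference score a fraction of the way toward the reward."""
    reward = REWARDS[action]
    return current + learning_rate * (reward - current)


# A clip starts at a neutral score and receives three user reactions.
score = 0.5
for action in ["accept", "accept", "reject"]:
    score = update_score(score, action)
```

Because each update moves only part of the way toward the reward, a single rejection lowers the score without erasing earlier acceptances.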

Transfer learning allows these systems to apply knowledge gained from one genre or scene type to novel situations. An AI trained primarily on action films can apply relevant principles when processing a dramatic scene with similar visual elements, adapting its approach based on contextual differences.

4. Advantages of AI-Driven Sound Design

The adoption of AI video editing for automatic sound matching delivers numerous tangible benefits that extend beyond simple time savings. These advantages are transforming professional workflows and enabling new creative possibilities.

Dramatic Time and Cost Reduction

Traditional sound design requires frame-by-frame review, manual searching through sound libraries, precise placement of each effect, and extensive tweaking to achieve proper synchronization. This process can consume several hours per minute of finished video. AI video editing systems accomplish the same work in minutes, reducing post-production timelines dramatically.

The cost implications are significant, particularly for productions with limited budgets. Independent creators, small marketing teams, and educational content producers can achieve professional sound quality without hiring specialized sound designers. Even large studios benefit from reduced labor costs and faster project turnaround.

Consistency Across Projects

Human sound designers, despite their expertise, introduce subtle variations in their work based on fatigue, mood, and personal preferences. AI video editing systems maintain consistent quality and style across unlimited footage. This consistency proves particularly valuable for serialized content, brand videos, and projects involving multiple editors.

The technology also reduces the chance that moments are overlooked. Every door close, every footstep, and every environmental transition the system detects receives audio treatment. Manual editing sometimes leaves gaps due to oversight or time constraints, whereas AI systems process every frame methodically.

Accessibility for Non-Technical Creators

Sound design has traditionally required specialized knowledge of audio engineering, acoustics, and editing software. AI video editing removes these barriers, enabling creators with no technical audio background to produce professional-sounding videos. The democratization of these capabilities has unleashed creativity across platforms like YouTube, TikTok, and Instagram.

Educational content creators, nonprofit organizations, and small business owners can now compete with well-funded productions in terms of audio quality. The technology levels the playing field, allowing compelling content to succeed based on storytelling merit rather than production budget.

Enhanced Creative Experimentation

When sound design becomes quick and effortless, creators can experiment more freely. Testing different audio approaches no longer requires significant time investment. AI video editing enables rapid iteration, letting filmmakers try multiple sound design variations and select the most effective approach.

This experimentation extends to stylistic choices. Creators can easily generate realistic, exaggerated, or stylized sound designs, comparing different aesthetic approaches to determine what best serves their vision. The reduced friction in the creative process encourages bolder artistic decisions.

5. Current Limitations and Challenges

Despite remarkable capabilities, AI video editing for automatic sound matching still faces constraints that creators should understand. Recognizing these limitations helps set appropriate expectations and informs strategic tool usage.

Context and Nuance Interpretation

While AI excels at recognizing obvious actions and objects, subtle contextual understanding remains challenging. A person picking up an object might require different sounds depending on whether they’re being careful, rushed, angry, or playful. AI video editing systems sometimes miss these emotional nuances, selecting technically correct but tonally inappropriate sound effects.

Genre conventions and stylistic choices also present difficulties. Horror films often exaggerate mundane sounds for tension, while comedies might use cartoonish effects for humor. Training AI to recognize and respect these genre-specific approaches requires extensive specialized datasets and remains an active area of development.

Complex Audio Layering

Professional sound design often involves dozens of layered effects creating rich, multidimensional audio environments. While AI video editing platforms can add multiple sounds simultaneously, achieving the depth and complexity of expert human work remains difficult. Subtle background layers, room tone variations, and sophisticated spatial audio design still benefit from human expertise.

The AI sometimes creates cluttered soundscapes by adding too many elements or fails to establish proper hierarchical relationships where certain sounds should dominate while others recede. Balancing foreground, midground, and background audio elements requires aesthetic judgment that current systems only partially replicate.

Unusual or Specialized Content

AI video editing systems train on common scenarios and mainstream content. Footage depicting unusual situations, historical periods, fantasy elements, or highly specialized technical subjects may confuse the AI. A medieval blacksmithing scene or futuristic sci-fi environment lacks extensive training examples, leading to less accurate sound selections.

Cultural specificity also challenges these systems. Sound design conventions vary across regions and cultures, but most AI platforms train predominantly on Western media. Content targeting specific cultural contexts may require manual adjustment to meet audience expectations.

Technical Audio Quality Variations

While AI excels at selecting and placing sounds, ensuring consistent technical quality across all elements requires sophisticated processing. Variations in recording quality, noise floors, and frequency response within sound effect libraries can create inconsistencies. AI video editing platforms must constantly evolve their normalization and processing algorithms to address these challenges.

The systems also sometimes struggle with proper spatial audio placement and movement. Panning sounds across the stereo field to match on-screen movement requires precise analysis that current technologies don’t always execute perfectly.
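Once an object's horizontal position is known, mapping it to the stereo field is commonly done with a constant-power pan law. The sketch assumes the tracker supplies a normalized position from 0.0 (left edge of the frame) to 1.0 (right edge); `pan_gains` is an illustrative name, and the hard part in practice is the position estimate, not this formula.

```python
import math


def pan_gains(x_norm: float) -> tuple[float, float]:
    """Return (left, right) channel gains for a normalized screen position.

    Uses a constant-power law so perceived loudness stays roughly
    the same as the sound moves across the stereo field.
    """
    x = min(max(x_norm, 0.0), 1.0)  # clamp positions outside the frame
    angle = x * math.pi / 2
    return math.cos(angle), math.sin(angle)


# A car driving from the left edge to the right edge of the frame.
gains_over_time = [pan_gains(x) for x in (0.0, 0.25, 0.5, 0.75, 1.0)]
```

At the center position both channels receive equal gain of about 0.707, so the squared gains always sum to 1.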

6. Leading AI Video Editing Platforms and Tools

The market for AI video editing solutions has expanded rapidly, with numerous platforms offering automated sound matching capabilities. Understanding the options helps creators select tools matching their needs and workflows.

Cloud-Based Solutions

Web-based AI video editing platforms offer the advantage of requiring no software installation and providing access to powerful processing capabilities regardless of local hardware. These services typically operate on subscription models with tiered pricing based on usage volume and feature access.

Leading cloud platforms integrate vast sound effect libraries directly into their interfaces, allowing seamless selection and application. The systems continuously update their AI models server-side, ensuring users always access the latest capabilities without manual updates. Collaborative features enable teams to work on projects simultaneously from different locations.

Desktop Software Integration

Established video editing applications increasingly incorporate AI video editing features as plugins or native capabilities. This integration allows creators to leverage AI sound matching while maintaining their familiar workflows and accessing other advanced editing tools within the same environment.

Desktop solutions often provide more granular control over AI suggestions, letting editors accept, modify, or reject automated sound placements individually. The systems typically cache AI-generated suggestions locally, enabling offline work after initial processing. Performance depends on local hardware capabilities, with powerful workstations providing faster results.

Mobile Applications

Smartphone-based AI video editing apps bring automated sound design to mobile creators. These applications optimize their AI models for mobile processors, balancing capability with performance and battery consumption. The tools particularly appeal to social media content creators who shoot and edit entirely on mobile devices.

Mobile platforms typically feature simplified interfaces with automated workflows requiring minimal user input. The apps analyze footage, apply sound effects, and render final videos with minimal configuration. While less powerful than desktop solutions, mobile AI video editing tools provide remarkable capabilities for their form factor.

Specialized Sound Design Tools

Some platforms focus specifically on audio post-production rather than comprehensive video editing. These specialized AI video editing solutions offer deeper sound design capabilities including advanced mixing, mastering, and spatial audio features. They integrate with standard video editing software through export and import workflows.

Specialized tools often provide larger sound effect libraries with more detailed categorization and searching capabilities. The platforms may offer industry-specific sound collections for genres like gaming, corporate video, documentaries, or film production.

7. Best Practices for Working with AI Sound Matching

Maximizing the benefits of AI video editing for sound design requires understanding how to work effectively with these systems. Strategic approaches enhance results and maintain creative control.

Providing Quality Source Material

AI performance depends heavily on input video quality. Clean footage with good lighting, stable camera work, and clear subject visibility enables more accurate analysis. When the AI video editing system can clearly identify objects and actions, sound matching improves significantly. Avoiding excessive motion blur, maintaining focus, and using adequate resolution all contribute to better automated results.

Organizing footage with descriptive file names, tags, and metadata also helps AI systems understand context. Including information about location, scene purpose, and intended mood guides the technology toward appropriate sound selections even before visual analysis begins.

Reviewing and Refining AI Suggestions

AI video editing can produce impressive automated results, but the output is best treated as a starting point rather than a final product. Reviewing each AI-placed sound effect and making selective adjustments brings the audio in line with the creative vision. Most platforms provide easy interfaces for adjusting timing, volume, and sound selection.

Common refinements include removing redundant sounds where the AI over-applied effects, adjusting volumes to create proper hierarchical relationships, and swapping AI-selected sounds for alternatives that better match the intended tone. This hybrid approach combines AI efficiency with human judgment.
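Removing over-applied effects can be partly automated with a minimum-gap rule. The sketch assumes the AI's placements can be exported as (time in seconds, label) pairs; `drop_redundant` and the 0.15 second gap are hypothetical choices, and an editor would still review the result.

```python
def drop_redundant(
    events: list[tuple[float, str]], min_gap_s: float = 0.15
) -> list[tuple[float, str]]:
    """Keep only the first of any same-label events closer than min_gap_s."""
    kept: list[tuple[float, str]] = []
    last_kept_time: dict[str, float] = {}
    for time_s, label in sorted(events):
        previous = last_kept_time.get(label)
        if previous is None or time_s - previous >= min_gap_s:
            kept.append((time_s, label))
            last_kept_time[label] = time_s
    return kept


# The AI placed two footsteps 50 ms apart, which is likely a duplicate.
placements = [
    (1.00, "footstep"),
    (1.05, "footstep"),
    (1.50, "footstep"),
    (1.02, "door"),
]
cleaned = drop_redundant(placements)
```

The gap is tracked per label, so a door sound close to a footstep is kept while the near-duplicate footstep is dropped.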

Establishing Clear Creative Direction

Many AI video editing platforms allow users to set parameters guiding the AI’s aesthetic choices. Specifying whether you want realistic, exaggerated, or minimalist sound design helps the system make appropriate selections. Genre tags, mood descriptors, and reference examples provide valuable context.

Creating and saving custom presets for recurring project types streamlines workflows. A creator producing weekly vlogs might establish a consistent sound design style that the AI applies automatically, while someone producing varied content adjusts settings per project.

Balancing Automation with Manual Control

Strategic use of AI video editing involves identifying which tasks benefit most from automation and where human expertise adds value. Routine sound effects like footsteps, door sounds, and environmental ambiance work well with full automation. Emotionally critical moments, unique creative choices, and genre-defining audio elements may warrant manual attention.

Some editors use AI to quickly populate a rough sound design, then focus their limited time refining the most important sequences. This approach delivers production efficiency while maintaining quality where it matters most.

8. The Future of AI Sound Design Technology

The trajectory of AI video editing for sound matching points toward increasingly sophisticated capabilities that will further transform content creation workflows and possibilities.

Real-Time Processing Capabilities

Emerging technologies may enable AI video editing systems to analyze and apply sound effects in real time during video playback or even during recording. This advancement would allow creators to hear fully sound-designed sequences immediately after shooting, enabling on-set creative decisions based on complete audiovisual presentation.

Real-time capabilities also enable interactive applications where sound design adapts dynamically to user actions in gaming, virtual reality, or interactive video experiences. The AI continuously analyzes changing visual conditions and updates audio accordingly without noticeable latency.

Emotion and Intent Recognition

Next-generation AI video editing platforms will incorporate advanced emotion recognition analyzing facial expressions, body language, and scene context to understand intended emotional impact. The systems will select sound effects not just matching actions but reinforcing emotional storytelling.

Intent recognition will enable AI to distinguish between similar actions with different narrative purposes. The sound of a door closing when someone leaves in anger will differ from someone departing peacefully, with the AI automatically making these nuanced distinctions.

Personalized Sound Design Styles

Machine learning advances will enable AI video editing systems to learn individual creator preferences, developing personalized sound design profiles. After analyzing a creator’s editing patterns and manual adjustments, the AI will automatically apply that creator’s unique aesthetic approach to new projects.

This personalization extends to audience preferences, with systems potentially adapting sound design based on demographic data or user feedback. Content might feature different audio treatments depending on viewer preferences, all generated automatically from a single master edit.

Integration with Other AI Editing Functions

The future of AI video editing involves comprehensive integration where sound matching works seamlessly with automated color grading, scene detection, pacing optimization, and even content generation. These systems will handle complete post-production workflows, requiring human input primarily for creative direction and final approval.

Collaborative AI editing will enable natural language control where creators describe desired outcomes conversationally, and the system implements comprehensive changes across multiple editing dimensions simultaneously. Instructions like “make this sequence more intense” would trigger coordinated adjustments to pacing, color, music, and sound effects.

Conclusion

AI video editing has fundamentally transformed the landscape of sound design, making professional-quality audio post-production accessible to creators at every level. The automatic matching of sound effects to visual scenes represents one of the most practical and immediately beneficial applications of artificial intelligence in content creation. By analyzing footage with sophisticated computer vision, accessing extensive sound libraries, and applying intelligent synchronization, these systems accomplish in minutes what once required hours of specialized labor.

The technology delivers compelling advantages including dramatic time savings, consistent quality, enhanced accessibility for non-technical creators, and increased creative experimentation. While limitations around contextual nuance, complex layering, and specialized content remain, ongoing developments continue narrowing these gaps. The emergence of cloud platforms, desktop integrations, mobile applications, and specialized tools provides options for diverse workflows and production contexts.

Successful implementation of AI video editing for sound design requires understanding best practices around source material quality, reviewing and refining AI suggestions, establishing creative direction, and strategically balancing automation with manual control. As the technology evolves toward real-time processing, emotion recognition, personalized styling, and comprehensive editing integration, the distinction between AI-assisted and human-created sound design will continue blurring.
