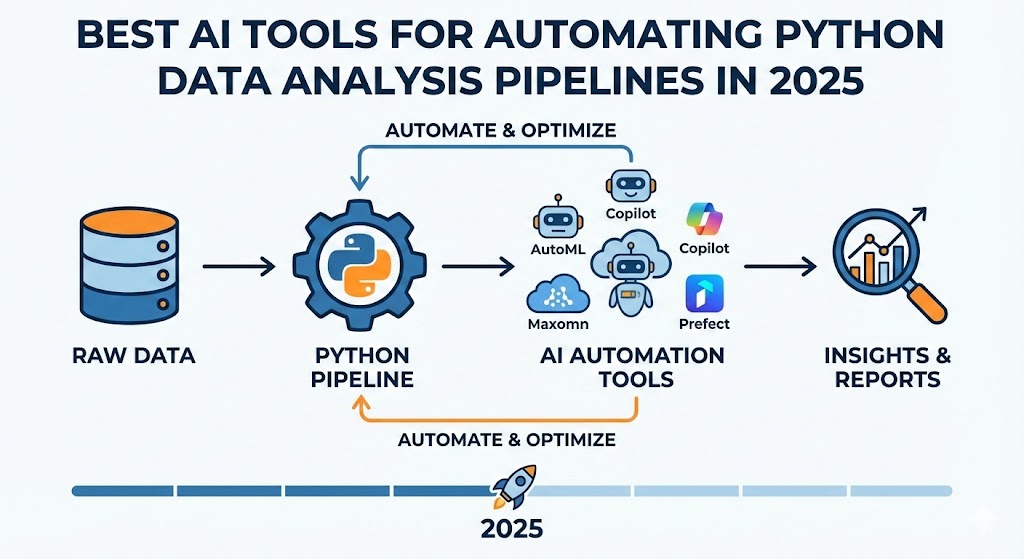

The landscape of data analysis has transformed dramatically with the emergence of artificial intelligence. Modern data professionals face mounting pressure to deliver insights faster while maintaining accuracy and scalability. AI tools for automating Python data analysis pipelines have become key assets for organizations seeking a competitive advantage through data-driven decision-making.

Python remains the dominant language for data analysis, and industry surveys consistently rank it as the most widely used language among data scientists and analysts. However, building robust data pipelines has traditionally required significant manual effort, from data cleaning and transformation to model deployment and monitoring. This is where AI-powered automation tools step in, changing how teams approach data workflows.

This guide explores ten of the most capable AI tools and automation frameworks for Python data analysis pipelines available in 2025, examining their capabilities, use cases, and how they can transform your data operations.

1. GitHub Copilot for Data Science

GitHub Copilot is a general-purpose AI coding assistant, but it is especially effective in data analysis workflows, in part because libraries such as pandas, NumPy, and scikit-learn are so common in public code. That makes it one of the most practical AI tools for automating Python data analysis pipelines.

Key Features and Capabilities

The tool excels at generating boilerplate code for common data operations. When you start typing a function name or a comment describing your intent, Copilot suggests complete implementations, often including error handling and edge-case checks. Because it draws context from your open files, its suggestions tend to stay consistent with your existing coding style and methodology.

Copilot can also suggest multi-line data transformation sequences. If you’re working with time-series data, it often proposes appropriate resampling, interpolation, and aggregation steps. Because the underlying model was trained on a large body of public code, its suggestions usually reflect widely used idioms, although they still need review before you rely on them.

Pipeline Automation Benefits

For pipeline automation, Copilot can draft data validation checks on request: tests for null values, outliers, and data type inconsistencies that catch problems before they cause downstream failures. It also suggests exception handling and logging code, which is crucial for production environments.
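
The sketch below shows the kind of validation helper an assistant like Copilot might produce from a comment such as "validate order records before loading". It was written by hand for illustration, not captured Copilot output, and the field names (order_id, amount) and the non-negative amount rule are assumptions.

```python
from typing import Any


def validate_records(records: list[dict[str, Any]], schema: dict[str, type]) -> list[str]:
    """Return human-readable problems found in `records`; an empty list means valid.

    `schema` maps each required field to its expected Python type.
    """
    problems: list[str] = []
    for i, row in enumerate(records):
        for field, expected in schema.items():
            value = row.get(field)
            if value is None:
                problems.append(f"row {i}: '{field}' is missing or null")
            elif not isinstance(value, expected):
                problems.append(
                    f"row {i}: '{field}' expected {expected.__name__}, "
                    f"got {type(value).__name__}"
                )
        # Assumed domain rule: order amounts must be non-negative.
        amount = row.get("amount")
        if isinstance(amount, (int, float)) and amount < 0:
            problems.append(f"row {i}: 'amount' is negative ({amount})")
    return problems
```

Raising an exception when the returned list is non-empty, or logging it and routing bad rows elsewhere, turns the helper into a pipeline gate.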

Copilot is also useful for ETL code. It can draft extraction functions for various data sources, including APIs, databases, and cloud storage; suggest transformation logic built on standard cleaning techniques; and sketch load operations with batch processing and error recovery.
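
As a rough illustration of batch processing and error recovery on the load side, here is a minimal sketch in plain Python. The write_batch callable stands in for a hypothetical database or API writer, and setting failed batches aside in a dead-letter list is one assumed recovery strategy among several.

```python
from typing import Callable, Iterator, TypeVar

T = TypeVar("T")


def batched(items: list[T], size: int) -> Iterator[list[T]]:
    """Yield consecutive slices of `items` holding at most `size` elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def load_in_batches(
    rows: list[T],
    write_batch: Callable[[list[T]], None],
    batch_size: int = 500,
    max_attempts: int = 3,
) -> tuple[int, list[list[T]]]:
    """Write rows batch by batch and return (rows_loaded, failed_batches).

    A batch that still fails after `max_attempts` tries is set aside in a
    dead-letter list so the remaining batches can still be loaded.
    """
    loaded = 0
    failed: list[list[T]] = []
    for batch in batched(rows, batch_size):
        for attempt in range(1, max_attempts + 1):
            try:
                write_batch(batch)
                loaded += len(batch)
                break  # batch succeeded; move on to the next one
            except Exception:  # broad on purpose: any writer error counts as a failure
                if attempt == max_attempts:
                    failed.append(batch)
    return loaded, failed
```

Returning the failed batches, rather than raising on the first error, lets the pipeline finish the remaining work and report exactly what needs a rerun.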

Integration and Workflow Enhancement

Copilot works in VS Code (including Jupyter notebooks opened there) and in JetBrains IDEs such as PyCharm. In a notebook it can use the code in other cells as context, which helps keep suggestions consistent with variables and transformations defined earlier. The tool can also draft docstrings and inline comments, improving code maintainability.

Reported productivity gains for routine data pipeline tasks fall in the range of 40-55%, though results vary with the task and the team. Teams also cite faster onboarding for new members and shorter code review cycles thanks to more consistent, better-documented code.

2. DataRobot AutoML Platform

DataRobot is a comprehensive platform for automating the entire machine learning lifecycle within Python data pipelines. As one of the most mature AI tools in this space, it handles everything from feature engineering to model deployment.

Automated Feature Engineering

DataRobot’s automated feature engineering capabilities analyze your raw data and generate hundreds of potential features. It applies mathematical transformations, creates interaction terms, and identifies temporal patterns without manual specification. The platform evaluates each feature’s predictive power and retains only those contributing to model performance.

The system handles categorical encoding intelligently, choosing between one-hot encoding, target encoding, or entity embedding based on cardinality and relationship to target variables. For text data, it automatically generates sentiment scores, topic models, and custom embeddings.
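
The article does not describe DataRobot’s exact decision rules, so the following is only a minimal sketch of the general idea: one-hot encoding for low-cardinality columns and smoothed target (mean) encoding for high-cardinality ones. The threshold of 10 categories and the smoothing weight are assumptions, and entity embeddings are omitted.

```python
from collections import defaultdict


def choose_encoding(values: list[str], max_onehot: int = 10) -> str:
    """Pick an encoding strategy from the column's cardinality (assumed rule)."""
    return "one-hot" if len(set(values)) <= max_onehot else "target"


def one_hot(values: list[str]) -> list[dict[str, int]]:
    """Map each value to 0/1 indicator columns, one column per category."""
    categories = sorted(set(values))
    return [{f"is_{c}": int(v == c) for c in categories} for v in values]


def target_encode(values: list[str], target: list[float], smoothing: float = 1.0) -> dict[str, float]:
    """Return a smoothed mean of `target` for each category.

    Each category mean is shrunk toward the global mean (weighted by
    `smoothing`) so that rare categories do not get extreme encodings.
    """
    global_mean = sum(target) / len(target)
    sums: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for v, y in zip(values, target):
        sums[v] += y
        counts[v] += 1
    return {
        v: (sums[v] + smoothing * global_mean) / (counts[v] + smoothing)
        for v in counts
    }
```

In a real pipeline the target encoding must be computed on training folds only; otherwise the encoded values leak the target into validation data.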

Model Selection and Optimization

The platform tests dozens of algorithms simultaneously, from gradient boosting machines to neural networks. It performs hyperparameter tuning using advanced optimization techniques including Bayesian optimization and genetic algorithms. Each model undergoes rigorous cross-validation, with results presented in intuitive dashboards.

DataRobot’s blueprint system shows exactly how each model processes data, enabling transparency and debugging. You can modify blueprints to enforce business rules or incorporate domain knowledge while maintaining automation benefits.

Python API and Pipeline Integration

The Python API allows seamless integration into existing data pipelines. You can trigger model training programmatically, retrieve predictions at scale, and monitor model performance in production. The API supports batch predictions, real-time scoring, and scheduled retraining.

Deployment options include REST APIs, Python scoring code, or container images. The platform handles version control, A/B testing, and champion-challenger frameworks automatically. Model monitoring features detect data drift and performance degradation, triggering alerts or automated retraining.
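
To make drift detection concrete, the sketch below computes the Population Stability Index (PSI), a widely used drift metric, between a training-time baseline and recent production values of one numeric feature. PSI is a stand-in chosen for illustration, not necessarily the metric DataRobot uses, and the ten equal-width bins are an assumption.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a new sample.

    Bins are equal-width over the baseline's range; values outside that range
    are clipped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from causing log(0) or division by zero
        return [max(c / len(sample), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but thresholds should be tuned per feature before they trigger alerts or retraining.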

Enterprise-Grade Capabilities

DataRobot provides robust governance features including model documentation, bias detection, and explainability reports. It integrates with MLOps tools for comprehensive pipeline orchestration. The platform scales horizontally, handling datasets with billions of rows across distributed computing clusters.

3. PyCaret Low-Code ML Library

PyCaret is an open-source, low-code machine learning library for Python that wraps libraries such as scikit-learn, XGBoost, and LightGBM behind a small set of functions. It is an excellent entry point among AI tools for automating Python data analysis pipelines, offering substantial functionality with minimal code.

Simplified Pipeline Creation

PyCaret reduces common machine learning workflows to a few lines of code. The setup function builds a preprocessing pipeline from defaults and the options you pass, covering missing value imputation, categorical encoding, optional feature scaling, and the train-test split. You specify your target variable, and PyCaret assembles the pipeline for you.

The compare_models function trains and cross-validates a range of algorithms in a single call and ranks them by performance metrics, eliminating the tedious process of training and comparing models by hand. Results appear in a sortable table showing metrics such as accuracy, precision, recall, F1 score, and training time.

Automated Preprocessing and Transformation

PyCaret’s optional preprocessing steps, switched on through setup parameters such as remove_outliers, transformation, and normalize, let you remove outliers from numerical features, apply power transforms to skewed distributions, and normalize features.

For imbalanced datasets, the fix_imbalance option resamples the training data (using SMOTE by default), and options such as polynomial_features and bin_numeric_features create polynomial, interaction, and binned features. Transformations are fitted on the training data only, keeping proper separation between training and test data and preventing data leakage.
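
The leakage point is easy to get wrong when writing preprocessing by hand. This plain-Python sketch (not PyCaret’s implementation) shows the principle: scaling statistics are learned from the training split only and then reused, unchanged, on the test split. The 75/25 split without shuffling is an assumption that keeps the example deterministic.

```python
from statistics import mean, pstdev


class TrainOnlyScaler:
    """Minimal z-score scaler whose statistics come from training data only."""

    def fit(self, values: list[float]) -> "TrainOnlyScaler":
        self.mean_ = mean(values)
        self.std_ = pstdev(values) or 1.0  # constant column: avoid division by zero
        return self

    def transform(self, values: list[float]) -> list[float]:
        return [(v - self.mean_) / self.std_ for v in values]


def split_then_scale(
    values: list[float], train_fraction: float = 0.75
) -> tuple[list[float], list[float]]:
    """Split first, then fit the scaler on the training part only."""
    cut = int(len(values) * train_fraction)
    train, test = values[:cut], values[cut:]
    scaler = TrainOnlyScaler().fit(train)  # test values never influence mean/std
    return scaler.transform(train), scaler.transform(test)
```

Fitting on the full dataset instead would let the extreme test value shift the mean and standard deviation, quietly leaking test information into training.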

Model Optimization and Tuning

The tune_model function performs hyperparameter optimization over predefined search spaces for each algorithm. By default it runs a random search; grid search and Bayesian optimization (through libraries such as Optuna or scikit-optimize) are available through the search_library and search_algorithm parameters. The blend_models and stack_models functions build blended or stacked ensembles, which often outperform individual models.

PyCaret also offers optional automated feature selection (the feature_selection setup parameter), using approaches such as model-based importance, statistical tests, or sequential selection. This reduces dimensionality while maintaining or improving model performance.

Deployment and Production Features

The finalize_model function retrains the model on the entire dataset, including the hold-out set, using the parameters discovered during tuning. The save_model function stores the complete pipeline (preprocessing steps plus trained model) as a pickle file, and predict_model ensures new data undergoes identical preprocessing before prediction.

The interpret_model and plot_model functions produce model interpretation outputs, including feature importance plots, SHAP values, and partial dependence plots. These explanations support stakeholder communication and model validation processes.

4. Kedro Data Pipeline Framework

Kedro stands out among tools for automating Python data analysis pipelines by providing a structured framework for building production-ready data science code. Developed by QuantumBlack Labs, it enforces software engineering best practices in data projects.

Pipeline Orchestration and Modularity

Kedro organizes data pipelines into nodes and edges, creating directed acyclic graphs that define data flow. Each node represents a discrete data processing step with clear inputs and outputs. This modular architecture enables parallel execution, easier debugging, and component reusability across projects.

The framework resolves dependencies between pipeline steps from their declared inputs and outputs and executes nodes in the correct order. Intermediate results can be persisted through the data catalog, so you can rerun only part of a pipeline (for example with kedro run --from-nodes) instead of recomputing everything, which significantly reduces development iteration time.
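
Kedro itself builds pipelines from node(...) objects collected into a Pipeline, and edges are implied whenever one node’s output name matches another node’s input name. The sketch below imitates only that idea with the standard library’s graphlib; it is not Kedro’s API, and the node and dataset names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable

# A hypothetical node: (function, input dataset names, output dataset name).
Node = tuple[Callable[..., object], list[str], str]


def run_pipeline(nodes: dict[str, Node], catalog: dict[str, object]) -> dict[str, object]:
    """Run nodes in dependency order, deriving edges from shared dataset names."""
    producers = {output: name for name, (_, _, output) in nodes.items()}
    # A node depends on whichever nodes produce its inputs; raw inputs have no producer.
    graph = {
        name: {producers[i] for i in inputs if i in producers}
        for name, (_, inputs, _) in nodes.items()
    }
    data = dict(catalog)  # start from raw datasets; outputs are added as nodes run
    for name in TopologicalSorter(graph).static_order():
        func, inputs, output = nodes[name]
        data[output] = func(*(data[i] for i in inputs))
    return data
```

Note that the nodes can be declared in any order; the dependency graph, not the declaration order, decides execution.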

Data Catalog and Version Control

Kedro’s data catalog abstracts data sources and formats from pipeline logic. You define datasets once in YAML configuration files, specifying location, format, and credentials. Pipeline code references datasets by name, making it simple to swap data sources between development, testing, and production environments.

The framework supports numerous data formats including CSV, Parquet, HDF5, SQL databases, and cloud storage services. It handles data serialization and deserialization automatically, allowing focus on transformation logic rather than I/O operations.

Configuration Management

Kedro implements sophisticated configuration management through YAML files. You can define environment-specific parameters, credentials, and settings without modifying code. The framework supports configuration layering, allowing base configurations with environment-specific overrides.

This separation of code and configuration enhances security by keeping sensitive credentials out of version control. It also simplifies A/B testing and experimentation by enabling parameter changes without code modifications.
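
Configuration layering can be pictured as merging an environment-specific file on top of a base file, much like Kedro’s conf/base and conf/local folders. This is a minimal sketch using a recursive dictionary merge; it is not Kedro’s config loader, whose exact merge semantics may differ (for example, replacing top-level keys instead of merging nested ones), and the keys shown are hypothetical.

```python
from copy import deepcopy
from typing import Any


def layer_configs(base: dict[str, Any], override: dict[str, Any]) -> dict[str, Any]:
    """Recursively merge `override` onto `base` without mutating either input."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Both sides are mappings: merge them key by key.
            merged[key] = layer_configs(merged[key], value)
        else:
            # Scalars, lists, or new keys: the override wins outright.
            merged[key] = deepcopy(value)
    return merged


base = {"db": {"host": "localhost", "port": 5432}, "sample_frac": 0.1}
prod = {"db": {"host": "prod-db"}, "sample_frac": 1.0}
config = layer_configs(base, prod)  # production host, base port, full sample
```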

Experiment Tracking and Reproducibility

Integration with MLflow and other experiment tracking tools allows comprehensive logging of model parameters, metrics, and artifacts. Kedro pipelines are inherently reproducible, with explicit dependencies and versioned datasets ensuring consistent results across runs.

The Kedro-Viz plugin renders an interactive visualization of pipeline structure, node descriptions, and data lineage. Because it is generated from the pipeline code itself, the visualization stays accurate as pipelines evolve, without manual documentation effort.

Production Deployment

Kedro projects can be packaged into Docker containers or converted into Airflow DAGs through plugins such as kedro-docker and kedro-airflow, and they can be deployed to targets such as AWS Lambda. The framework’s structure translates naturally to production orchestration tools, and its hooks let you add monitoring, logging, and alerting without cluttering pipeline code.

5. Apache Airflow with AI Extensions

Apache Airflow has become the industry standard for workflow orchestration. Core Airflow is not an AI tool, but extensions, plugins, and custom operators built on top of it add AI-driven capabilities such as intelligent scheduling, anomaly detection, and self-healing, making it one of the most powerful platforms for automating Python data analysis pipelines.

Dynamic Pipeline Generation

AI-driven extensions can use historical execution patterns to optimize task scheduling. By learning task dependencies, execution times, and resource requirements, they can adjust parallelism and resource allocation for better throughput.

Custom operators and sensors can also detect data schema changes and adapt downstream tasks. If a source system modifies column names or data types, such tooling can identify the affected tasks and suggest or implement remediation strategies.

Intelligent Monitoring and Alerting

AI-powered monitoring analyzes task execution metrics to establish baseline performance. The system detects anomalies in execution time, resource consumption, or data quality, alerting teams before issues impact downstream processes. Machine learning models predict potential failures based on system metrics and external factors.

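Airflow natively supports per-task retries with exponential backoff through the retries, retry_delay, and retry_exponential_backoff task arguments; smarter strategies that adapt to failure patterns are layered on top. For example, transient network issues can trigger quick retries, while data quality problems initiate diagnostic workflows and notify responsible teams.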
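
A minimal sketch of such failure-aware retry logic in plain Python follows. It retries exceptions assumed to be transient (ConnectionError, TimeoutError) with exponential backoff and fails fast on a hypothetical DataQualityError so a diagnostic workflow can take over. It is not an Airflow API; inside Airflow the same idea would live in task code or a custom operator.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class DataQualityError(Exception):
    """Hypothetical error for bad input data; retrying will not fix it."""


# Exceptions assumed to be transient (network blips, timeouts).
TRANSIENT_ERRORS = (ConnectionError, TimeoutError)


def run_with_smart_retry(
    task: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry transient failures with exponential backoff; fail fast on bad data."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except TRANSIENT_ERRORS:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last transient error
            sleep(base_delay * 2 ** (attempt - 1))  # 1s, 2s, 4s, ... with the defaults
        # DataQualityError (and any other exception) is not caught, so it
        # propagates immediately to whoever handles diagnostics.
    raise RuntimeError("unreachable: the loop always returns or raises")
```

The injectable sleep function keeps the helper testable without real delays.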

Resource Optimization

Airflow’s AI extensions optimize cluster resource allocation dynamically. The system predicts resource needs based on data volume, historical patterns, and scheduled workloads. It scales worker pools proactively, preventing bottlenecks during peak periods while minimizing costs during low activity.

Airflow’s pools and per-task priority_weight settings already let critical pipelines receive resources first; AI-driven schedulers go further by balancing throughput, latency requirements, and resource constraints to maximize overall data platform efficiency.

Data Quality and Validation

Integrated data quality checks leverage AI to detect anomalies in pipeline outputs. Statistical models learn normal data distributions and flag deviations requiring investigation. The system generates data quality reports automatically, tracking metrics over time and identifying degradation trends.
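
One simple way to learn what normal looks like is to compare each new pipeline metric with its recent history. The sketch below flags a value more than three standard deviations from the historical mean (a z-score rule). The choice of metric, for example daily row counts, and the threshold of 3 are assumptions, and production tools typically use richer models.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations
    from the mean of past observations."""
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a perfectly constant metric is unusual
    return abs(latest - mu) / sigma > threshold


daily_row_counts = [1000, 1020, 990, 1010, 1005]
alert = is_anomalous(daily_row_counts, 400)  # a sudden drop in rows loaded
```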

Validation rules adapt based on data evolution, reducing false positives while maintaining sensitivity to genuine issues. The platform suggests new validation rules by analyzing historical failures and near-misses.

Integration Ecosystem

Airflow connects with hundreds of data sources, processing engines, and storage systems through its extensive provider ecosystem. Native integrations with Spark, Kubernetes, AWS, GCP, and Azure enable comprehensive data pipeline coverage. The platform supports custom operators for proprietary systems.

6. Metaflow by Netflix

Metaflow, originally built for Netflix’s data science infrastructure and later open-sourced, ranks among the strongest tools for automating Python data analysis pipelines. It focuses on making data scientists productive by handling infrastructure complexity automatically.

Seamless Scalability

Metaflow allows you to develop pipelines locally and scale to cloud computing resources without code changes. Decorators specify compute requirements, and the framework provisions appropriate resources automatically. You can run small experiments on your laptop and production workflows on GPU clusters using identical code.

The framework manages data transfer between local and remote environments transparently. It handles serialization of Python objects, ensuring your data structures work consistently across execution contexts.

Built-in Versioning and Reproducibility

Every Metaflow run receives a unique identifier and automatic versioning. The framework stores code snapshots, input data checksums, and execution parameters for each run. You can retrieve any previous result or recreate any historical run exactly.

The system tracks data lineage automatically, showing which data artifacts generated which outputs. This enables impact analysis when data sources change and supports regulatory compliance requirements.

Easy Parallel Processing

Metaflow’s foreach construct, passed as an argument to self.next, fans a step out into parallel branches with minimal code changes. The framework distributes the independent branches across available resources, and a join step that you write collects their results; decorators such as @retry and @catch handle errors and partial failures without much explicit programming.

You can parallelize hyperparameter searches, cross-validation folds, or data partitions effortlessly. The framework optimizes execution plans based on resource availability and task dependencies.
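
In Metaflow the fan-out is written as self.next(self.train, foreach="params"), and a later join step receives the branch results through its inputs argument. The sketch below reproduces only that shape locally with concurrent.futures; it is not Metaflow code, and train_and_score is a hypothetical stand-in for a real training run.

```python
from concurrent.futures import ThreadPoolExecutor


def train_and_score(learning_rate: float) -> tuple[float, float]:
    """Hypothetical stand-in for one training run; returns (param, score)."""
    score = 1.0 - abs(learning_rate - 0.1)  # toy score that peaks at 0.1
    return learning_rate, score


def foreach_then_join(params: list[float]) -> float:
    """Fan out one branch per parameter, then join by picking the best score."""
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(train_and_score, params))  # the "foreach" branches
    best_param, _ = max(results, key=lambda r: r[1])       # the "join" step
    return best_param
```

Threads keep the example simple; CPU-bound training would normally use ProcessPoolExecutor or, in Metaflow, separate remote tasks.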

Production Deployment Simplified

Metaflow integrates with AWS Step Functions and with Kubernetes (through Argo Workflows) for production deployment. The same Python code that runs on your laptop executes in production environments with robust error handling and monitoring. Deploying a flow compiles it into the orchestrator’s workflow definition automatically, and infrastructure-as-code templates are available for provisioning the underlying cloud resources.

Scheduling integrates with existing tools or uses Metaflow’s native capabilities. The platform supports event-triggered workflows, enabling reactive data pipelines that respond to new data arrivals or external signals.

Data Science Focus

Unlike general workflow tools, Metaflow optimizes for data science workflows specifically. It handles large datasets efficiently, supports GPU acceleration natively, and integrates with popular ML libraries seamlessly. The framework includes utilities for experiment tracking, model evaluation, and result visualization.

7. Prefect Modern Workflow Orchestration

Prefect represents next-generation workflow orchestration and positions itself as one of the most developer-friendly tools for automating Python data analysis pipelines. Its Python-native design and cloud-native architecture address limitations of traditional orchestration tools.

Dynamic and Flexible Workflows

Prefect workflows are pure Python functions, making them testable, debuggable, and maintainable with standard development tools. The framework supports dynamic workflows that adapt structure based on runtime conditions. You can generate tasks conditionally, loop over variable-length datasets, or implement complex branching logic naturally.

The platform handles state management automatically, tracking task execution status and managing retries without boilerplate code. Flow runs can be paused and resumed, and with result persistence enabled, a rerun after an infrastructure failure can reuse completed task results instead of recomputing them.

Hybrid Execution Model

Prefect separates orchestration from execution, allowing you to run workflows anywhere. The control plane coordinates execution while compute happens in your infrastructure. This hybrid model maintains security and compliance while leveraging Prefect’s orchestration capabilities.

You can execute tasks locally, in Docker containers, on Kubernetes clusters, or across multiple cloud providers. The platform handles authentication, networking, and resource provisioning automatically.

Intelligent Scheduling and Triggers

Prefect’s scheduling system supports complex patterns including cron schedules, interval-based execution, and custom calendar logic. Workflows can trigger based on external events, data arrival, or completion of upstream pipelines.

The platform can batch high-frequency events to reduce overhead while maintaining responsiveness. Backfills can be run by triggering a flow with historical date parameters, so date-aware logic processes past periods correctly.

Observability and Debugging

Comprehensive logging captures workflow execution details without manual instrumentation. The UI displays task states, execution times, and dependencies visually. You can inspect task inputs, outputs, and intermediate states for any run.

The platform retains execution history, enabling trend analysis and performance optimization. Alerts trigger based on workflow status, execution duration, or custom conditions. Integration with monitoring tools provides end-to-end observability.

Parametrization and Testing

Prefect workflows accept parameters cleanly, enabling reusable pipeline templates. You can define default values, validation rules, and parameter descriptions that appear in the UI. This supports self-service data pipelines where analysts provide inputs through a user interface.

The framework supports unit testing and integration testing naturally. You can mock external dependencies, provide test data, and verify workflow behavior using standard Python testing frameworks.
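
Because the pipeline logic is plain Python, it can be tested with the standard library alone. In this sketch, daily_revenue is a hypothetical pipeline step whose data source is passed in as a parameter, so a unittest.mock.Mock can replace the real API or database; in a Prefect project the same function would typically be wrapped as a task.

```python
from typing import Callable
from unittest.mock import Mock


def daily_revenue(fetch_orders: Callable[[str], list[dict]], day: str) -> float:
    """Hypothetical pipeline step: sum completed order amounts for one day.

    The data source is injected, so tests can swap in a mock.
    """
    orders = fetch_orders(day)
    return sum(o["amount"] for o in orders if o["status"] == "completed")


# Test with a mocked data source instead of a real API or database.
fake_source = Mock(return_value=[
    {"amount": 20.0, "status": "completed"},
    {"amount": 5.0, "status": "refunded"},
    {"amount": 12.5, "status": "completed"},
])
assert daily_revenue(fake_source, "2025-01-31") == 32.5
fake_source.assert_called_once_with("2025-01-31")
```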

8. Great Expectations Data Quality Platform

Great Expectations focuses specifically on data quality, making it an essential component of automated Python data analysis pipelines. It helps teams maintain high-quality data through automated validation and documentation.

Expectation-Based Validation

Great Expectations introduces the concept of expectations, which are declarative statements about data properties. You specify that columns should be non-null, numeric values should fall within ranges, or categorical values should match predefined sets. The framework validates data against these expectations automatically.

The library ships with a large set of built-in expectations covering common validation scenarios, and the community has contributed many more. You can create custom expectations for domain-specific requirements, and expectations combine into suites that validate entire datasets comprehensively.
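
The sketch below mimics this declarative style in plain Python. Each rule is named after a real Great Expectations expectation (expect_column_values_to_not_be_null, expect_column_values_to_be_between, expect_column_values_to_be_in_set), but the implementation is a simplified stand-in, not the library’s API, and the column names are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Expectation:
    """A declarative rule about one column (simplified stand-in, not the GX API)."""
    name: str
    column: str
    check: Callable[[Any], bool]


def expect_not_null(column: str) -> Expectation:
    return Expectation("expect_column_values_to_not_be_null", column,
                       lambda v: v is not None)


def expect_between(column: str, low: float, high: float) -> Expectation:
    return Expectation("expect_column_values_to_be_between", column,
                       lambda v: v is not None and low <= v <= high)


def expect_in_set(column: str, allowed: set[Any]) -> Expectation:
    return Expectation("expect_column_values_to_be_in_set", column,
                       lambda v: v in allowed)


def validate(rows: list[dict[str, Any]], suite: list[Expectation]) -> dict[str, bool]:
    """Evaluate each expectation in the suite; True means every row passed."""
    return {
        f"{e.name}({e.column})": all(e.check(row.get(e.column)) for row in rows)
        for e in suite
    }
```

Keeping the rules as data rather than scattered if-statements is what allows a suite to be stored, reviewed, and rendered as documentation.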

Automated Documentation

Great Expectations generates human-readable data documentation, called Data Docs, from expectations and validation results. These reports show validation outcomes, data distributions, and results over time, and they serve as living data contracts that stay synchronized with actual data characteristics.

The documentation includes summary statistics, sample data, and expectation validation results. Stakeholders can understand data quality without querying databases or reading code.

Profiling and Expectation Generation

The profiler analyzes datasets and suggests appropriate expectations automatically. It examines data types, distributions, relationships, and patterns to generate comprehensive expectation suites. This automated profiling accelerates initial setup and helps identify validation requirements you might overlook.

You can customize profiling behavior to emphasize specific data quality dimensions or ignore irrelevant attributes, and then edit the generated suite by hand to capture domain knowledge the profiler cannot infer.

Data Pipeline Integration

Great Expectations integrates with popular orchestration tools including Airflow, Prefect, and Kedro. Checkpoint objects encapsulate validation logic and integrate into pipeline steps cleanly. Validation results trigger alerts, halt pipelines, or activate remediation workflows based on configured actions.

The platform stores validation results in databases, enabling historical analysis and data quality dashboards. Integration with data catalogs maintains metadata synchronization across your data ecosystem.

Collaborative Features

Great Expectations supports team collaboration through shared expectation suites and validation results. Data producers and consumers can communicate about data quality expectations explicitly. The platform enables data contracts that formalize agreements between teams.

Version control integration tracks expectation changes over time, supporting impact analysis and auditing requirements. Teams can review and approve expectation modifications before deployment.

9. Deepnote Collaborative Data Platform

Deepnote reimagines the notebook experience with collaboration and automation features that make it a valuable option for automating Python data analysis pipelines. It combines interactive development with production pipeline capabilities.

Real-Time Collaboration

Multiple team members can work in the same notebook simultaneously, seeing changes in real-time. The platform handles cell execution coordination, preventing conflicts when multiple users run cells concurrently. Comments and discussions attach directly to code cells, maintaining context during reviews.

Version control integration tracks notebook changes automatically. You can compare versions, restore previous states, or merge changes from different branches. This brings software engineering practices to notebook-based development.

Automated Scheduling and Deployment

Deepnote notebooks can execute on schedules automatically, transforming them into production data pipelines. The platform handles dependency installation, environment configuration, and resource allocation. Notebooks access databases, APIs, and cloud storage securely without exposing credentials in code.

Parameterized notebooks accept inputs from external systems, enabling reusable analysis templates. You can trigger notebook execution via API calls, integrating with existing orchestration tools.

AI-Powered Code Assistance

Built-in AI assistance suggests code completions, fixes errors, and answers questions about data and code. The AI understands your data context, providing relevant suggestions based on DataFrame schemas and existing variables. It can generate exploratory analysis code, create visualizations, or explain complex operations.

The assistant helps with debugging by analyzing error messages and suggesting solutions. It can also refactor code for better performance or readability, using the context of the current notebook.

Integration and Data Connectivity

Deepnote connects to numerous data sources including PostgreSQL, MySQL, Snowflake, BigQuery, and S3. Connections are configured once and remain available across notebooks, and the platform manages authentication for them.

Integration with BI tools allows you to embed notebook outputs in dashboards. Results export to various formats including CSV, Excel, and Parquet. The platform supports dbt integration for transformation workflows.

Scalable Computing

Deepnote provides scalable compute resources on-demand. You can select machine sizes based on workload requirements, including GPU-enabled instances for machine learning tasks. The platform manages infrastructure provisioning and teardown automatically.

Resource usage monitoring prevents unexpected costs. The system suspends idle notebooks automatically and alerts you when approaching usage limits.

10. Claude and ChatGPT Code Interpreters

Large language model code interpreters represent a new category of AI tools for automating Python data analysis pipelines. These tools combine natural language understanding with code execution capabilities, enabling conversational data analysis.

Natural Language to Code Translation

You can describe analysis goals in plain English, and the AI generates appropriate Python code. This dramatically lowers the barrier to data analysis for less technical users. The AI handles syntax details, library imports, and error correction automatically.

The models understand data context, asking clarifying questions when requirements are ambiguous. They suggest visualizations, statistical tests, and modeling approaches based on data characteristics and analysis objectives.

Iterative Analysis Development

Code interpreters support conversational refinement of analyses. You can request modifications like “add confidence intervals” or “color by category,” and the AI updates code accordingly. This interactive approach accelerates exploration and experimentation.

The systems maintain context across conversation turns, building complex analyses incrementally. They remember variable names, data transformations, and previous results, enabling coherent multi-step workflows.

Automated Debugging and Optimization

When code produces errors, the AI analyzes the error messages and attempts fixes automatically. It can suggest performance optimizations for slow operations and point out inefficient patterns, and it can sometimes catch common mistakes such as data leakage or incorrect joins, although these checks are not guaranteed.

Error explanations help users learn, describing why issues occurred and how fixes address them. This educational aspect helps team members develop their skills while completing immediate tasks.

Data Exploration and Insight Generation

Code interpreters can explore datasets autonomously, identifying interesting patterns, anomalies, or relationships. They generate summary statistics, create appropriate visualizations, and suggest hypotheses for further investigation. This automated exploration accelerates initial data understanding.

The AI produces reports combining code, visualizations, and narrative explanations. These reports document analysis processes thoroughly, supporting reproducibility and knowledge sharing.

Limitations and Best Practices

While powerful, these tools work best for exploratory analysis rather than production pipelines. They may generate inefficient code for large datasets or make statistical mistakes in complex scenarios. Human review remains essential for critical analyses.

Best practices include validating generated code before execution, testing on sample data first, and maintaining version control of finalized analyses. Combining AI assistance with traditional development practices yields optimal results.
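
Testing on sample data first can be as simple as checking generated code against results worked out by hand. In this sketch, mean_by_group is a hypothetical function of the kind a code interpreter might produce; the assertion compares it with hand-computed values on a three-row sample that includes a single-row group as an edge case.

```python
from collections import defaultdict


# Suppose an AI assistant produced this function (hypothetical example).
def mean_by_group(rows: list[dict], key: str, value: str) -> dict:
    totals, counts = defaultdict(float), defaultdict(int)
    for row in rows:
        totals[row[key]] += row[value]
        counts[row[key]] += 1
    return {k: totals[k] / counts[k] for k in totals}


# Review step: compare against results worked out by hand on a tiny sample.
sample = [
    {"region": "north", "sales": 10.0},
    {"region": "north", "sales": 30.0},
    {"region": "south", "sales": 7.0},  # single-row group: an easy edge case to get wrong
]
assert mean_by_group(sample, "region", "sales") == {"north": 20.0, "south": 7.0}
```

Only once checks like this pass is it worth running the code on the full dataset and committing it to version control.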

Conclusion

The emergence of AI tools for automating Python data analysis pipelines has fundamentally changed how organizations approach data work. These tools address different aspects of the pipeline lifecycle, from initial development through production deployment and monitoring.

GitHub Copilot and AI code assistants accelerate development by generating boilerplate code and suggesting implementations. AutoML platforms like DataRobot and PyCaret automate model selection and optimization. Framework tools including Kedro, Airflow, Metaflow, and Prefect provide robust orchestration and production deployment capabilities. Specialized tools like Great Expectations ensure data quality while collaboration platforms like Deepnote make teams more productive.

The most effective data organizations combine multiple tools, selecting the best solution for each pipeline stage. A typical modern stack might include Copilot for development, Kedro for pipeline structure, Great Expectations for validation, and Airflow for orchestration. This integrated approach maximizes automation benefits while maintaining flexibility and control.

As these tools continue to evolve, we can expect deeper integration, more sophisticated automation, and expanded capabilities. The tools are becoming more intelligent, learning from usage patterns and suggesting optimizations proactively. They’re also becoming more accessible, enabling broader participation in data analysis while maintaining rigor and quality.

Investing time in learning these tools pays substantial dividends. Some teams report productivity improvements of 30-60%, along with faster time-to-insight and higher-quality outputs, although results depend heavily on the team and workload. The initial learning curve is modest compared to the long-term benefits, making now an excellent time to incorporate these tools into your data workflows.

The future of data analysis lies in intelligent automation that augments human capabilities rather than replacing them. By leveraging these AI tools for automating Python data analysis pipelines, you can focus on high-value activities like strategy, interpretation, and decision-making while automation handles repetitive tasks. This combination of human insight and machine efficiency represents the optimal approach for modern data-driven organizations.
